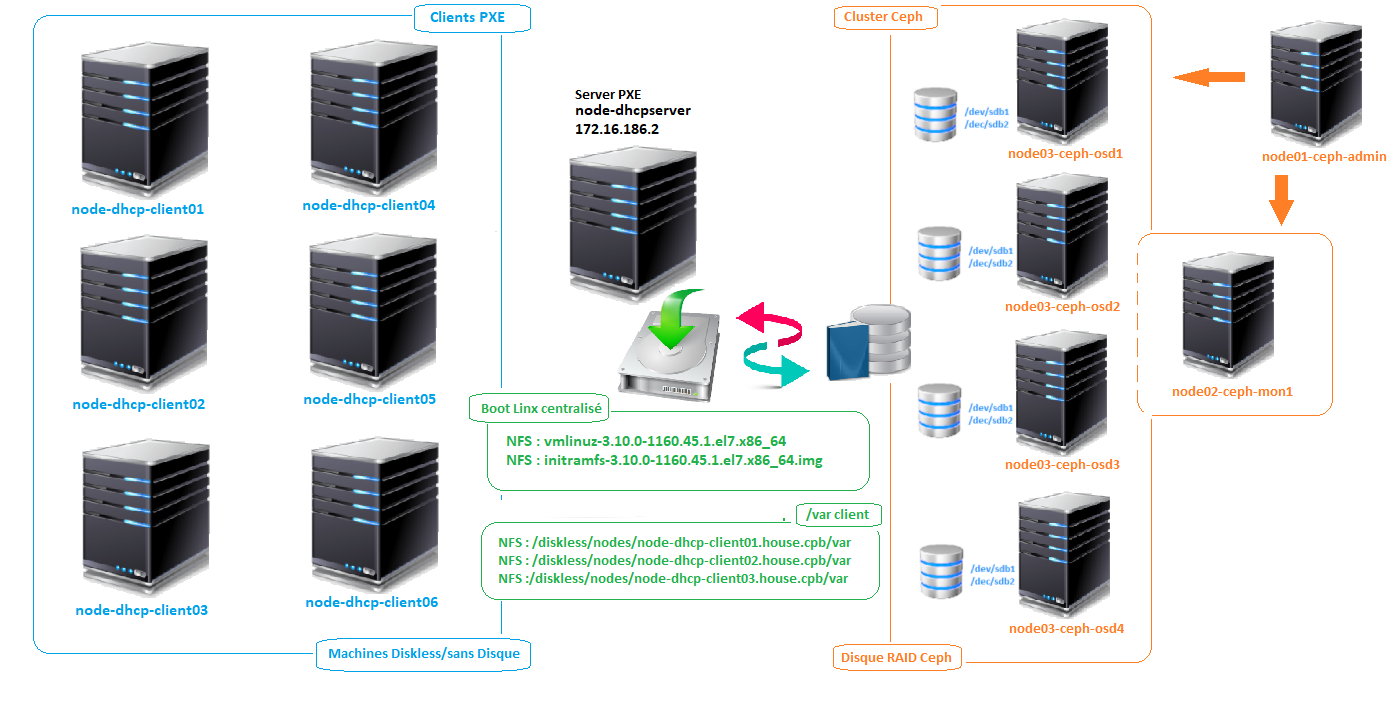

Principle

Goal

Boot diskless Linux machines by centralizing the boot files on the OSD cluster of a Ceph deployment, so that the diskless service is less vulnerable to disk failures.

Every machine gets its IP address from a single place, the node-dhcp-pxe machine.

Machine inventory for this procedure

A Ceph cluster

- node01-ceph-admin

- node02-ceph-mon1

- node03-ceph-osd1

- node04-ceph-osd2

- node05-ceph-osd3

- node06-ceph-osd4

A PXE server

- node-dhcp-pxe

Diskless clients

- node-dhcp-client01

- node-dhcp-client02

- node-dhcp-client03

- node-dhcp-client04

- node-dhcp-client05

- node-dhcp-client06

Installing the DHCP service on node-dhcp-pxe

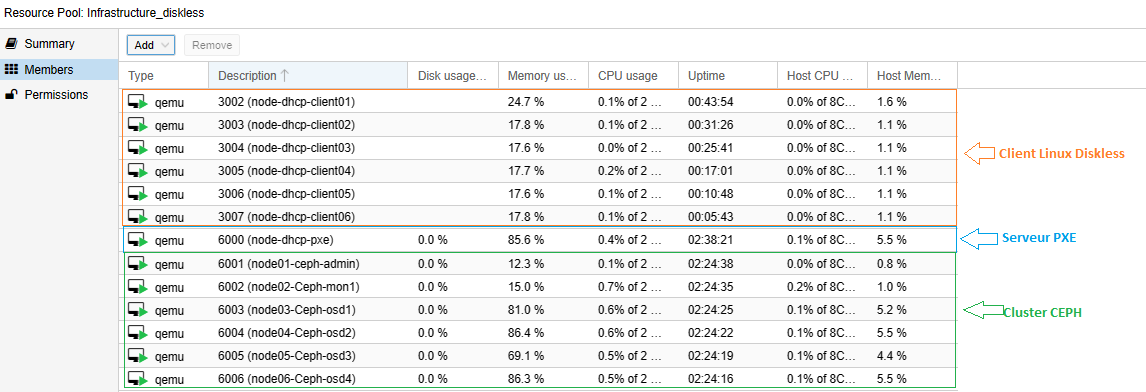

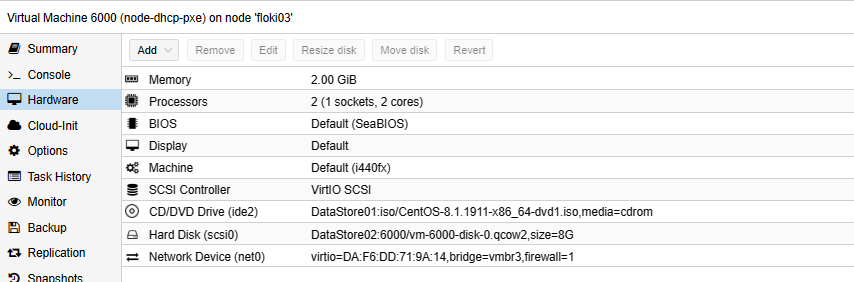

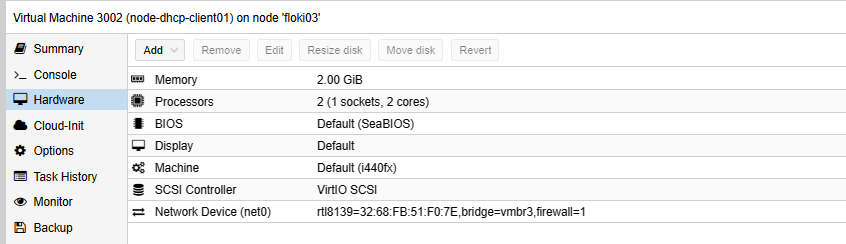

Machine specification

- vCPU: 2

- RAM: 2 GB

- Disk: 8 GB

- Network: 172.16.186.2

- Linux OS: CentOS 7

1°) Update the PXE machine

[root@node-dhcp-pxe ~]# yum -y update

2°) Disable SELinux

[root@node-dhcp-pxe ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@node-dhcp-pxe ~]# grep '^SELINUX=' /etc/selinux/config

SELINUX=disabled

[root@node-dhcp-pxe ~]# reboot

3°) Install and configure DHCP

[root@node-dhcp-pxe ~]# yum install dhcp

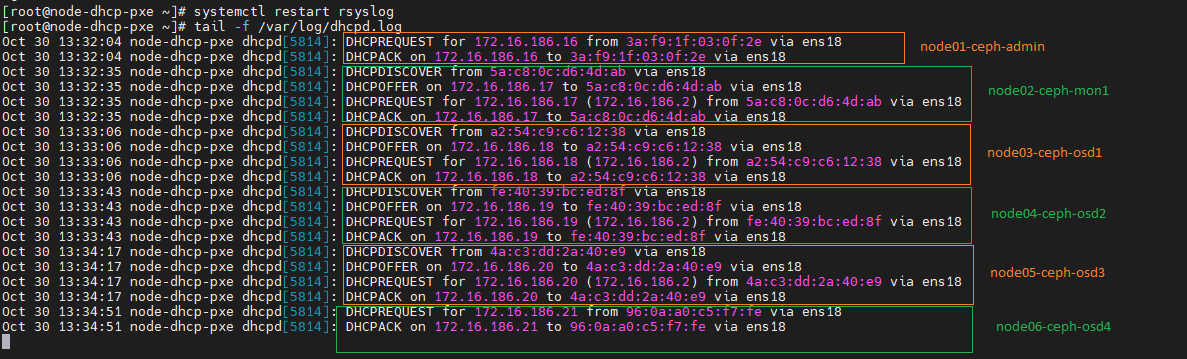

MAC address ⬄ static IP mapping

- node-dhcp-client01 : 32:68:FB:51:F0:7E => 172.16.186.10

- node-dhcp-client02 : 8A:3E:96:91:BB:E6 => 172.16.186.11

- node-dhcp-client03 : 06:86:1D:34:F2:69 => 172.16.186.12

- node-dhcp-client04 : 8E:2F:3B:D5:70:BC => 172.16.186.13

- node-dhcp-client05 : 1E:65:A2:89:4E:E4 => 172.16.186.14

- node-dhcp-client06 : EE:B7:EC:20:CE:CA => 172.16.186.15

- node01-ceph-admin : 3A:F9:1F:03:0F:2E => 172.16.186.16

- node02-ceph-mon1 : 5A:C8:0C:D6:4D:AB => 172.16.186.17

- node03-ceph-osd1 : A2:54:C9:C6:12:38 => 172.16.186.18

- node04-ceph-osd2 : FE:40:39:BC:ED:8F => 172.16.186.19

- node05-ceph-osd3 : 4A:C3:DD:2A:40:E9 => 172.16.186.20

- node06-ceph-osd4 : 96:0A:A0:C5:F7:FE => 172.16.186.21
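Typing twelve nearly identical host entries by hand is error-prone. As a minimal sketch, the stanzas could be generated from the mapping above. The helper name emit_host_stanzas and the "name MAC IP" input layout are assumptions made for this illustration; they are not part of ISC DHCP.

```shell
#!/bin/sh
# Sketch: print one dhcpd.conf "host" stanza per "name MAC IP" line read on stdin.
# emit_host_stanzas is a hypothetical helper name, not an ISC DHCP tool.
emit_host_stanzas() {
    while read -r name mac ip; do
        # Skip blank lines and comment lines.
        case "$name" in ''|'#'*) continue ;; esac
        printf 'host %s {\n' "$name"
        printf '    option host-name "%s";\n' "$name"
        printf '    hardware ethernet %s;\n' "$mac"
        printf '    fixed-address %s;\n' "$ip"
        printf '}\n'
    done
}

# Example with two entries taken from the mapping above.
emit_host_stanzas <<'EOF'
node-dhcp-client01 32:68:FB:51:F0:7E 172.16.186.10
node01-ceph-admin  3A:F9:1F:03:0F:2E 172.16.186.16
EOF
```

The output can be pasted inside the subnet block of /etc/dhcp/dhcpd.conf; generating the host-name from the same field as the stanza name avoids mismatches between the two.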

[root@node-dhcp-pxe ~]# vi /etc/dhcp/dhcpd.conf

#### DHCP server configuration file

option domain-name "house.cpb";

option domain-name-servers 192.168.1.1;

# 24-hour lease

default-lease-time 86400;

# Maximum lease of 48 hours

max-lease-time 172800;

# Syslog facility used for logging

log-facility local7;

allow booting;

allow bootp;

option time-offset -18000;

# Subnet 172.16.186.0/24 served by this machine

subnet 172.16.186.0 netmask 255.255.255.0 {

# Gateway

option routers 172.16.186.201;

option subnet-mask 255.255.255.0;

##############################################################

# Ceph cluster machines

##############################################################

#Machine node01-ceph-admin

host node01-ceph-admin {

option host-name "node01-ceph-admin";

hardware ethernet 3A:F9:1F:03:0F:2E;

fixed-address 172.16.186.16;

}

#Machine node02-ceph-mon1

host node02-ceph-mon1 {

option host-name "node02-ceph-mon1";

hardware ethernet 5A:C8:0C:D6:4D:AB;

fixed-address 172.16.186.17;

}

#Machine node03-ceph-osd1

host node03-ceph-osd1 {

option host-name "node03-ceph-osd1";

hardware ethernet A2:54:C9:C6:12:38;

fixed-address 172.16.186.18;

}

#Machine node04-ceph-osd2

host node04-ceph-osd2 {

option host-name "node04-ceph-osd2";

hardware ethernet FE:40:39:BC:ED:8F;

fixed-address 172.16.186.19;

}

#Machine node05-ceph-osd3

host node05-ceph-osd3 {

option host-name "node05-ceph-osd3";

hardware ethernet 4A:C3:DD:2A:40:E9;

fixed-address 172.16.186.20;

}

#Machine node06-ceph-osd4

host node06-ceph-osd4 {

option host-name "node06-ceph-osd4";

hardware ethernet 96:0A:A0:C5:F7:FE;

fixed-address 172.16.186.21;

}

##############################################################

# Diskless client machines

##############################################################

#Machine Client01

host node-dhcp-client01 {

option host-name "node-dhcp-client01";

hardware ethernet 32:68:FB:51:F0:7E;

fixed-address 172.16.186.10;

}

#Machine Client02

host node-dhcp-client02 {

option host-name "node-dhcp-client02";

hardware ethernet 8A:3E:96:91:BB:E6;

fixed-address 172.16.186.11;

}

#Machine Client03

host node-dhcp-client03 {

option host-name "node-dhcp-client03";

hardware ethernet 06:86:1D:34:F2:69;

fixed-address 172.16.186.12;

}

#Machine Client04

host node-dhcp-client04 {

option host-name "node-dhcp-client04";

hardware ethernet 8E:2F:3B:D5:70:BC;

fixed-address 172.16.186.13;

}

#Machine Client05

host node-dhcp-client05 {

option host-name "node-dhcp-client05";

hardware ethernet 1E:65:A2:89:4E:E4;

fixed-address 172.16.186.14;

}

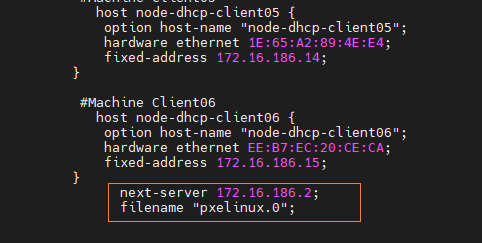

#Machine Client06

host node-dhcp-client06 {

option host-name "node-dhcp-client06";

hardware ethernet EE:B7:EC:20:CE:CA;

fixed-address 172.16.186.15;

}

}

[root@node-dhcp-pxe ~]# systemctl enable dhcpd

[root@node-dhcp-pxe ~]# systemctl start dhcpd

DHCP firewall rules

[root@node-dhcp-pxe ~]# firewall-cmd --permanent --zone=public --add-service=dhcp

[root@node-dhcp-pxe ~]# firewall-cmd --reload

Disable IPv6

[root@node-dhcp-pxe ~]# vi /etc/sysctl.conf

net.ipv6.conf.all.disable_ipv6 = 1

net.ipv6.conf.all.autoconf = 0

net.ipv6.conf.default.disable_ipv6 = 1

net.ipv6.conf.default.autoconf = 0

[root@node-dhcp-pxe ~]# sysctl -p

Check the IP assignments when the Ceph machines boot

If you have no DNS

[root@node-dhcp-pxe ~]# vi /etc/hosts

172.16.186.2 node-dhcp-pxe node-dhcp-pxe.house.cpb

172.16.186.10 node-dhcp-client01 node-dhcp-client01.house.cpb

172.16.186.11 node-dhcp-client02 node-dhcp-client02.house.cpb

172.16.186.12 node-dhcp-client03 node-dhcp-client03.house.cpb

172.16.186.13 node-dhcp-client04 node-dhcp-client04.house.cpb

172.16.186.14 node-dhcp-client05 node-dhcp-client05.house.cpb

172.16.186.15 node-dhcp-client06 node-dhcp-client06.house.cpb

172.16.186.16 node01-ceph-admin node01-ceph-admin.house.cpb

172.16.186.17 node02-ceph-mon1 node02-ceph-mon1.house.cpb

172.16.186.18 node03-ceph-osd1 node03-ceph-osd1.house.cpb

172.16.186.19 node04-ceph-osd2 node04-ceph-osd2.house.cpb

172.16.186.20 node05-ceph-osd3 node05-ceph-osd3.house.cpb

172.16.186.21 node06-ceph-osd4 node06-ceph-osd4.house.cpb

[root@node-dhcp-pxe ~]# scp /etc/hosts root@node01-ceph-admin:/etc/hosts

[root@node-dhcp-pxe ~]# scp /etc/hosts root@node02-ceph-mon1:/etc/hosts

[root@node-dhcp-pxe ~]# scp /etc/hosts root@node03-ceph-osd1:/etc/hosts

[root@node-dhcp-pxe ~]# scp /etc/hosts root@node04-ceph-osd2:/etc/hosts

[root@node-dhcp-pxe ~]# scp /etc/hosts root@node05-ceph-osd3:/etc/hosts

[root@node-dhcp-pxe ~]# scp /etc/hosts root@node06-ceph-osd4:/etc/hosts
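Each /etc/hosts line repeats the host name twice (short name, then fully qualified name), which makes copy-paste slips easy. A minimal sketch for deriving the lines from "IP name" pairs follows; hosts_entries is a hypothetical helper name, and the domain is passed in as an argument rather than read from any system setting.

```shell
#!/bin/sh
# Sketch: build /etc/hosts lines ("IP short-name FQDN") from "IP name" pairs on stdin.
# hosts_entries is a hypothetical helper; $1 is the DNS domain to append.
hosts_entries() {
    domain=$1
    while read -r ip name; do
        # Skip blank lines and comment lines.
        case "$ip" in ''|'#'*) continue ;; esac
        printf '%s %s %s.%s\n' "$ip" "$name" "$name" "$domain"
    done
}

# Example with three of the machines listed above.
hosts_entries house.cpb <<'EOF'
172.16.186.2 node-dhcp-pxe
172.16.186.14 node-dhcp-client05
172.16.186.15 node-dhcp-client06
EOF
```

Because the fully qualified name is always derived from the short name, the two columns cannot drift apart.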

Installing a Ceph cluster

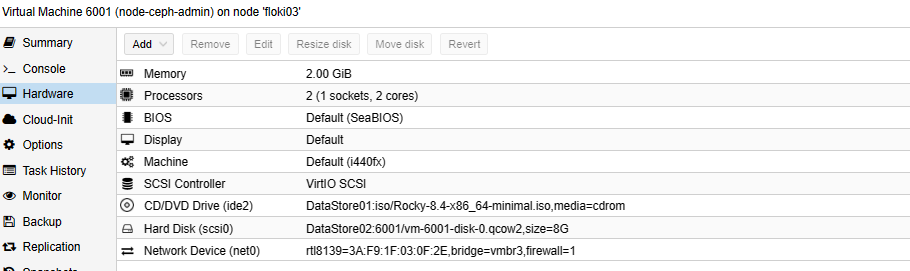

1°) Machine specifications

Administration and monitor

node01-ceph-admin

- IP: 172.16.186.16 (DHCP)

- Disk 1 (system): 8 GB

- RAM: 2 GB

- 2 vCPU

- Linux OS: CentOS 7

node02-ceph-mon1

- IP: 172.16.186.17 (DHCP)

- Disk 1 (system): 8 GB

- RAM: 2 GB

- 2 vCPU

- Linux OS: CentOS 7

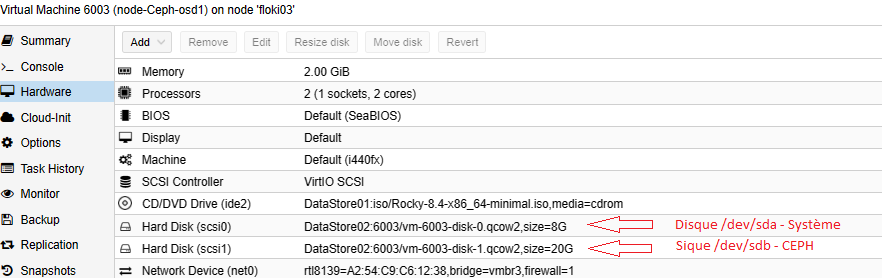

OSD nodes (Ceph disks)

node03-ceph-osd1

- IP: 172.16.186.18 (DHCP)

- Disk 1 (system): 8 GB

- Disk 2 (Ceph): 20 GB

- RAM: 2 GB

- 2 vCPU

- Linux OS: CentOS 7

node04-ceph-osd2

- IP: 172.16.186.19 (DHCP)

- Disk 1 (system): 8 GB

- Disk 2 (Ceph): 20 GB

- RAM: 2 GB

- 2 vCPU

- Linux OS: CentOS 7

node05-ceph-osd3

- IP: 172.16.186.20 (DHCP)

- Disk 1 (system): 8 GB

- Disk 2 (Ceph): 20 GB

- RAM: 2 GB

- 2 vCPU

- Linux OS: CentOS 7

node06-ceph-osd4

- IP: 172.16.186.21 (DHCP)

- Disk 1 (system): 8 GB

- Disk 2 (Ceph): 20 GB

- RAM: 2 GB

- 2 vCPU

- Linux OS: CentOS 7

2°) Install the base packages (all 6 Ceph nodes)

[root@node01-ceph-admin ~]# yum update -y

[root@node01-ceph-admin ~]# yum install -y nmap net-tools wget

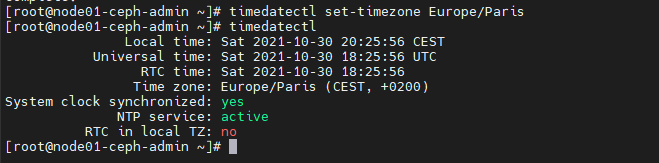

3°) Set the time zone (all 6 nodes)

[root@node01-ceph-admin ~]# timedatectl set-timezone Europe/Paris

[root@node01-ceph-admin ~]# timedatectl

4°) Disable SELinux (all 6 nodes)

[root@node01-ceph-admin ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@node01-ceph-admin ~]# reboot

5°) Create a Ceph user (all 6 Ceph nodes)

[root@node01-ceph-admin ~]# useradd -d /home/cephuser -m cephuser

[root@node01-ceph-admin ~]# passwd cephuser

[root@node01-ceph-admin ~]# echo "cephuser ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/cephuser

[root@node01-ceph-admin ~]# chmod 0440 /etc/sudoers.d/cephuser

[root@node01-ceph-admin ~]# sed -i 's/Defaults requiretty/#Defaults requiretty/g' /etc/sudoers

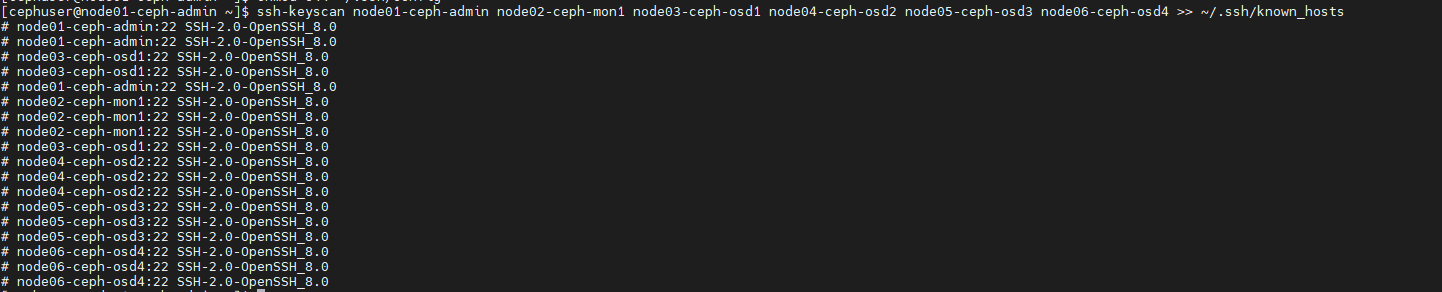

6°) SSH access (node01-ceph-admin)

Generate an SSH key for cephuser (ssh-keygen)

[root@node01-ceph-admin ~]# su - cephuser

[cephuser@node01-ceph-admin ~]$ ssh-keygen

Create the SSH config for the nodes

[cephuser@node01-ceph-admin ~]$ vi ~/.ssh/config

Host node01-ceph-admin

Hostname node01-ceph-admin

User cephuser

Host node02-ceph-mon1

Hostname node02-ceph-mon1

User cephuser

Host node03-ceph-osd1

Hostname node03-ceph-osd1

User cephuser

Host node04-ceph-osd2

Hostname node04-ceph-osd2

User cephuser

Host node05-ceph-osd3

Hostname node05-ceph-osd3

User cephuser

Host node06-ceph-osd4

Hostname node06-ceph-osd4

User cephuser
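The SSH config above is the same three-line block repeated for each node. As a rough sketch, it could be produced by a small loop; ssh_config_blocks is a hypothetical helper name, and it only prints text, so the result still has to be redirected into ~/.ssh/config by hand.

```shell
#!/bin/sh
# Sketch: print one ~/.ssh/config block per host name.
# ssh_config_blocks is a hypothetical helper: $1 is the remote user,
# the remaining arguments are host names.
ssh_config_blocks() {
    user=$1
    shift
    for host in "$@"; do
        printf 'Host %s\n    Hostname %s\n    User %s\n' "$host" "$host" "$user"
    done
}

# The six Ceph nodes of this setup.
ssh_config_blocks cephuser node01-ceph-admin node02-ceph-mon1 \
    node03-ceph-osd1 node04-ceph-osd2 node05-ceph-osd3 node06-ceph-osd4
```

Adding a node later (node-dhcp-pxe, for instance) then only means passing one more name.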

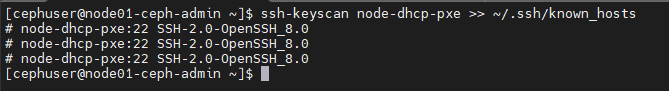

[cephuser@node01-ceph-admin ~]$ chmod 644 ~/.ssh/config

[cephuser@node01-ceph-admin ~]$ ssh-keyscan node01-ceph-admin node02-ceph-mon1 node03-ceph-osd1 node04-ceph-osd2 node05-ceph-osd3 node06-ceph-osd4 >> ~/.ssh/known_hosts

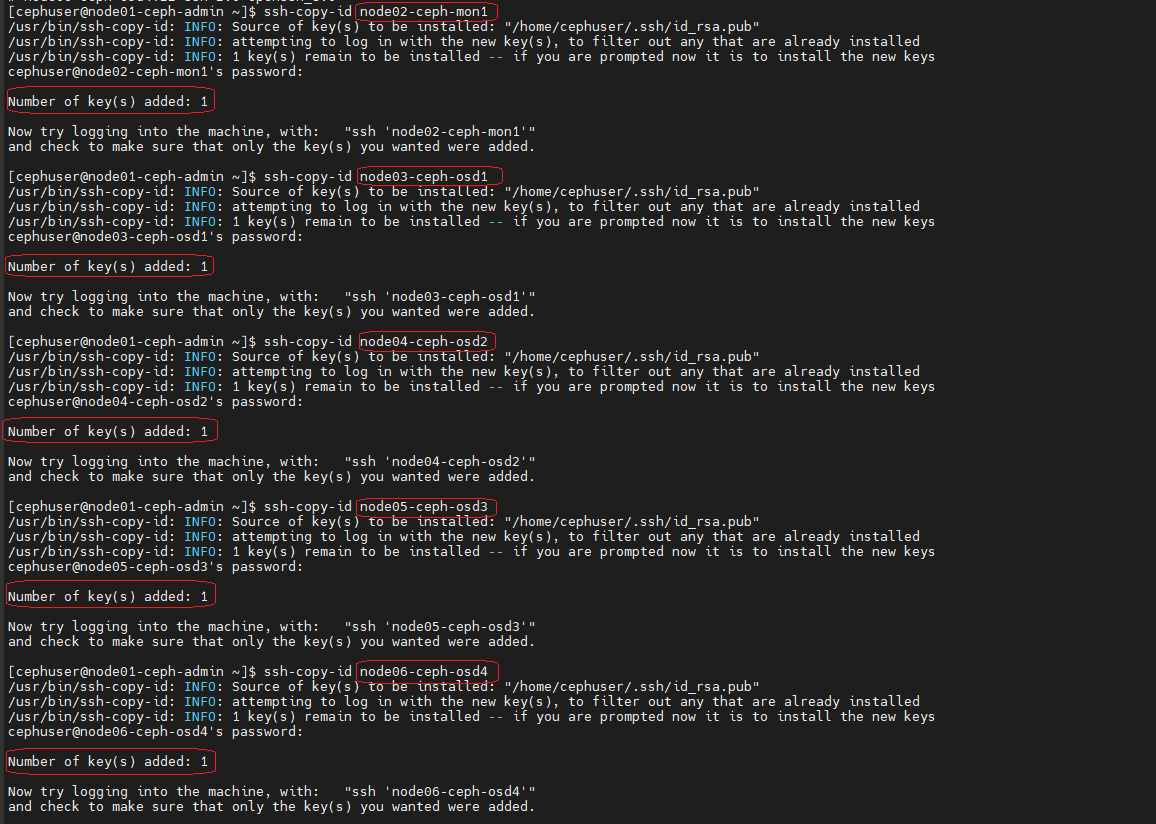

Copy the SSH keys to the Ceph machines

[cephuser@node01-ceph-admin ~]$ ssh-copy-id node02-ceph-mon1

[cephuser@node01-ceph-admin ~]$ ssh-copy-id node03-ceph-osd1

[cephuser@node01-ceph-admin ~]$ ssh-copy-id node04-ceph-osd2

[cephuser@node01-ceph-admin ~]$ ssh-copy-id node05-ceph-osd3

[cephuser@node01-ceph-admin ~]$ ssh-copy-id node06-ceph-osd4

7°) Configure the firewall rules

node01-ceph-admin

[root@node01-ceph-admin ~]# systemctl start firewalld && systemctl enable firewalld

[root@node01-ceph-admin ~]# firewall-cmd --zone=public --add-port=80/tcp --permanent

[root@node01-ceph-admin ~]# firewall-cmd --zone=public --add-port=2003/tcp --permanent

[root@node01-ceph-admin ~]# firewall-cmd --zone=public --add-port=4505-4506/tcp --permanent

[root@node01-ceph-admin ~]# firewall-cmd --reload

[root@node01-ceph-admin ~]# firewall-cmd --list-ports

80/tcp 2003/tcp 4505-4506/tcp

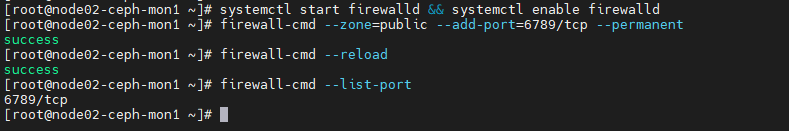

node02-ceph-mon1

[root@node02-ceph-mon1 ~]# systemctl start firewalld && systemctl enable firewalld

[root@node02-ceph-mon1 ~]# firewall-cmd --zone=public --add-port=6789/tcp --permanent

[root@node02-ceph-mon1 ~]# firewall-cmd --reload

[root@node02-ceph-mon1 ~]# firewall-cmd --list-ports

6789/tcp

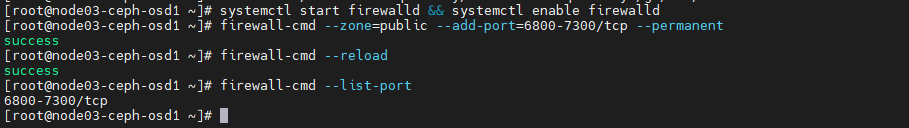

node03-ceph-osd1, node04-ceph-osd2, node05-ceph-osd3, node06-ceph-osd4

[root@node03-ceph-osd1 ~]# systemctl start firewalld && systemctl enable firewalld

[root@node03-ceph-osd1 ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent

[root@node03-ceph-osd1 ~]# firewall-cmd --reload

[root@node03-ceph-osd1 ~]# firewall-cmd --list-ports

6800-7300/tcp

[root@node04-ceph-osd2 ~]# systemctl start firewalld && systemctl enable firewalld

[root@node04-ceph-osd2 ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent && firewall-cmd --reload

[root@node05-ceph-osd3 ~]# systemctl start firewalld && systemctl enable firewalld

[root@node05-ceph-osd3 ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent && firewall-cmd --reload

[root@node06-ceph-osd4 ~]# systemctl start firewalld && systemctl enable firewalld

[root@node06-ceph-osd4 ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent && firewall-cmd --reload
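The rules in this step differ only by node role. The sketch below records that mapping in one place and prints, without executing anything, the firewall-cmd lines for a given role. The role names (admin, mon, osd) and both function names are assumptions made for the illustration; the port lists are the ones opened in this step.

```shell
#!/bin/sh
# Sketch: map a node role to the TCP ports opened in this step.
# ports_for_role and firewall_commands are hypothetical helpers.
ports_for_role() {
    case "$1" in
        admin) echo "80/tcp 2003/tcp 4505-4506/tcp" ;;
        mon)   echo "6789/tcp" ;;
        osd)   echo "6800-7300/tcp" ;;
        *)     echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Print (do not run) the firewall-cmd lines for a role, so they can be reviewed first.
firewall_commands() {
    ports=$(ports_for_role "$1") || return 1
    for port in $ports; do
        echo "firewall-cmd --zone=public --add-port=$port --permanent"
    done
    echo "firewall-cmd --reload"
}

firewall_commands osd
```

Printing the commands rather than running them keeps the sketch safe to try on any machine; piping the output to sh on the target node as root would apply it.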

8°) Partition the Ceph disk on the OSD nodes

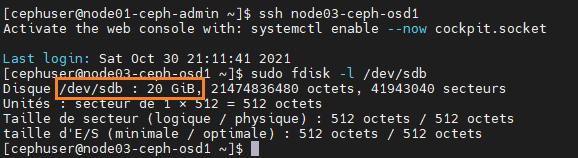

node03-ceph-osd1

[root@node01-ceph-admin ~]# su - cephuser

[cephuser@node01-ceph-admin ~]$ ssh node03-ceph-osd1

[cephuser@node03-ceph-osd1 ~]$ sudo fdisk -l /dev/sdb

[cephuser@node03-ceph-osd1 ~]$ sudo parted -s /dev/sdb mklabel gpt mkpart primary xfs 0% 100%

[cephuser@node03-ceph-osd1 ~]$ sudo mkfs.xfs /dev/sdb -f

[cephuser@node03-ceph-osd1 ~]$ sudo blkid -o value -s TYPE /dev/sdb

xfs

node04-ceph-osd2

[cephuser@node01-ceph-admin ~]$ ssh node04-ceph-osd2

[cephuser@node04-ceph-osd2 ~]$ sudo parted -s /dev/sdb mklabel gpt mkpart primary xfs 0% 100%

[cephuser@node04-ceph-osd2 ~]$ sudo mkfs.xfs /dev/sdb -f

[cephuser@node04-ceph-osd2 ~]$ sudo blkid -o value -s TYPE /dev/sdb

xfs

node05-ceph-osd3

[cephuser@node01-ceph-admin ~]$ ssh node05-ceph-osd3

[cephuser@node05-ceph-osd3 ~]$ sudo parted -s /dev/sdb mklabel gpt mkpart primary xfs 0% 100%

[cephuser@node05-ceph-osd3 ~]$ sudo mkfs.xfs /dev/sdb -f

[cephuser@node05-ceph-osd3 ~]$ sudo blkid -o value -s TYPE /dev/sdb

xfs

node06-ceph-osd4

[cephuser@node01-ceph-admin ~]$ ssh node06-ceph-osd4

[cephuser@node06-ceph-osd4 ~]$ sudo parted -s /dev/sdb mklabel gpt mkpart primary xfs 0% 100%

[cephuser@node06-ceph-osd4 ~]$ sudo mkfs.xfs /dev/sdb -f

[cephuser@node06-ceph-osd4 ~]$ sudo blkid -o value -s TYPE /dev/sdb

xfs

9°) Create and deploy the cluster

Start the cluster (node01-ceph-admin)

[root@node01-ceph-admin ~]# su - cephuser

[cephuser@node01-ceph-admin ~]$ mkdir cluster

Install ceph-deploy (node01-ceph-admin)

[cephuser@node01-ceph-admin ~]$ sudo rpm -Uhv http://download.ceph.com/rpm-jewel/el7/noarch/ceph-release-1-1.el7.noarch.rpm

[cephuser@node01-ceph-admin ~]$ sudo yum update -y && sudo yum install ceph-deploy -y

[cephuser@node01-ceph-admin ~]$ cd cluster/

Create the monitor "node02-ceph-mon1" (node01-ceph-admin)

[cephuser@node01-ceph-admin cluster]$ ceph-deploy new node02-ceph-mon1

[cephuser@node01-ceph-admin cluster]$ vi ceph.conf

Append to the end of the file:

# Public address

public network = 172.16.186.0/24

osd pool default size = 2

Install Ceph on every node of the cluster via ceph-deploy

[cephuser@node01-ceph-admin cluster]$ ceph-deploy install node01-ceph-admin node02-ceph-mon1 node03-ceph-osd1 node04-ceph-osd2 node05-ceph-osd3 node06-ceph-osd4

Deploy the monitor on the cluster (mon)

[cephuser@node01-ceph-admin cluster]$ ceph-deploy mon create-initial

[cephuser@node01-ceph-admin cluster]$ ceph-deploy gatherkeys node02-ceph-mon1

List the /dev/sdb disks of the OSD nodes

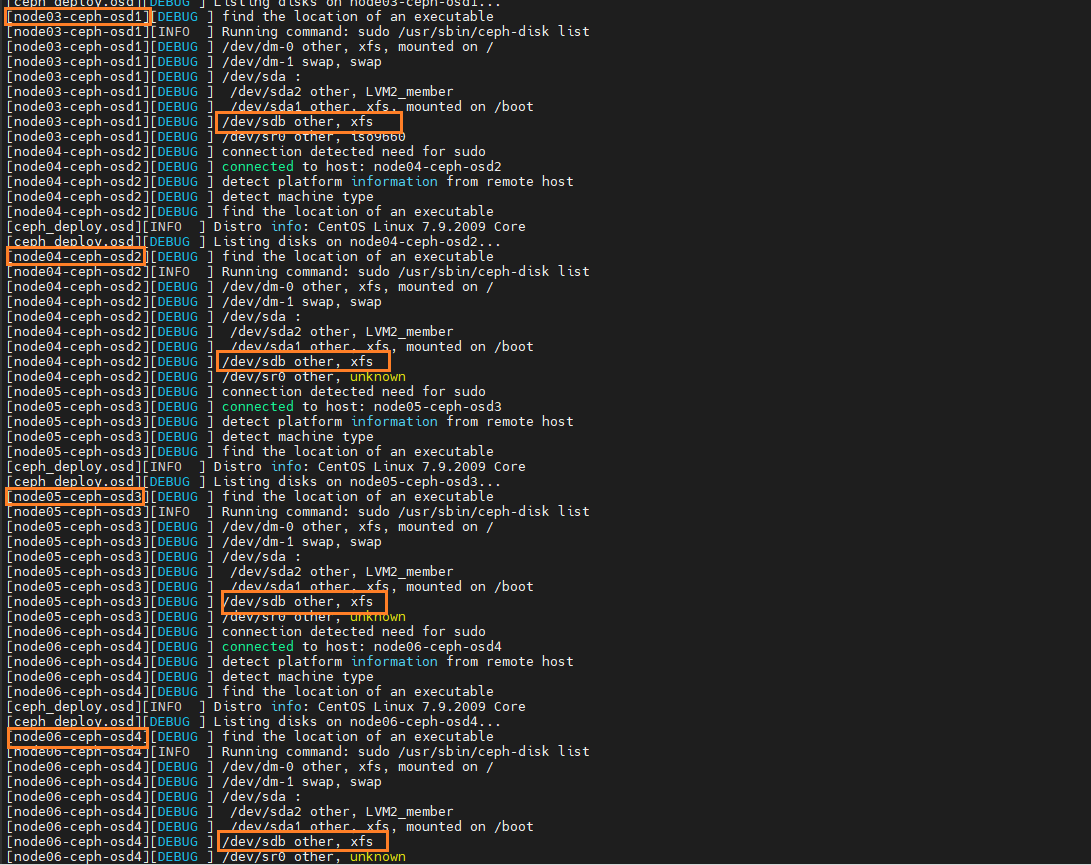

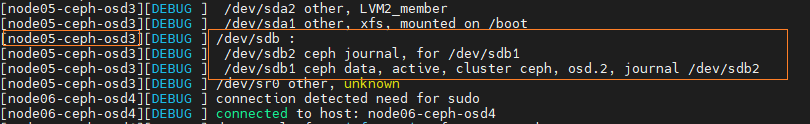

[cephuser@node01-ceph-admin cluster]$ ceph-deploy disk list node03-ceph-osd1 node04-ceph-osd2 node05-ceph-osd3 node06-ceph-osd4

Prepare the /dev/sdb disks of the OSD nodes for the cluster

[cephuser@node01-ceph-admin cluster]$ ceph-deploy disk zap node03-ceph-osd1:/dev/sdb node04-ceph-osd2:/dev/sdb node05-ceph-osd3:/dev/sdb node06-ceph-osd4:/dev/sdb

[cephuser@node01-ceph-admin cluster]$ ceph-deploy osd prepare node03-ceph-osd1:/dev/sdb node04-ceph-osd2:/dev/sdb node05-ceph-osd3:/dev/sdb node06-ceph-osd4:/dev/sdb

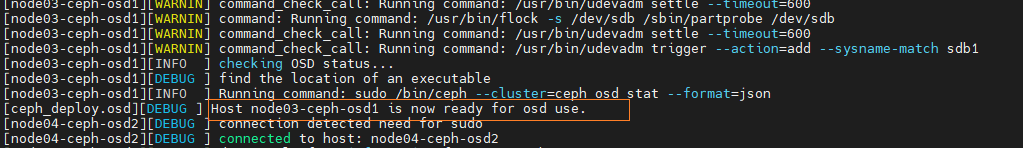

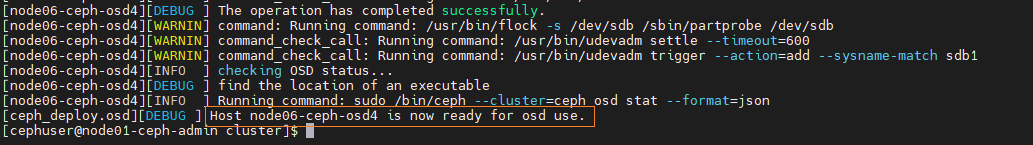

Activate the /dev/sdb1 partition on the OSD nodes

[cephuser@node01-ceph-admin cluster]$ ceph-deploy osd activate node03-ceph-osd1:/dev/sdb1 node04-ceph-osd2:/dev/sdb1 node05-ceph-osd3:/dev/sdb1 node06-ceph-osd4:/dev/sdb1

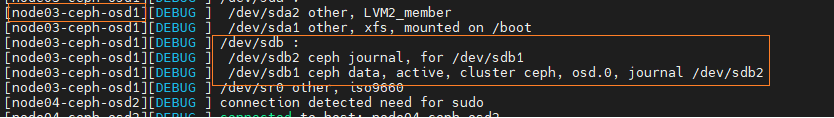

Partition list of the OSD nodes

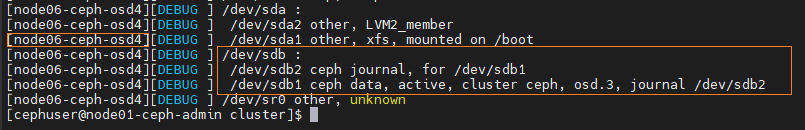

Deploy the admin configuration to the whole cluster

[cephuser@node01-ceph-admin cluster]$ ceph-deploy admin node01-ceph-admin node02-ceph-mon1 node03-ceph-osd1 node04-ceph-osd2 node05-ceph-osd3 node06-ceph-osd4

[cephuser@node01-ceph-admin cluster]$ sudo chmod 644 /etc/ceph/ceph.client.admin.keyring

Check the Ceph cluster

[cephuser@node01-ceph-admin cluster]$ ceph health

HEALTH_OK

[cephuser@node01-ceph-admin cluster]$ ceph -s

Mount the Ceph disk on the "node-dhcp-pxe" machine

node-dhcp-pxe

[root@node-dhcp-pxe ~]# useradd -d /home/cephuser -m cephuser

[root@node-dhcp-pxe ~]# passwd cephuser

[root@node-dhcp-pxe ~]# echo "cephuser ALL = (root) NOPASSWD:ALL" >> /etc/sudoers.d/cephuser

[root@node-dhcp-pxe ~]# chmod 0440 /etc/sudoers.d/cephuser

[root@node-dhcp-pxe ~]# sed -i 's/Defaults requiretty/#Defaults requiretty/g' /etc/sudoers

node01-ceph-admin

Add node-dhcp-pxe to the SSH config of node01-ceph-admin

[root@node01-ceph-admin ~]# su - cephuser

[cephuser@node01-ceph-admin ~]$ vi ~/.ssh/config

# Append to the end of the file

Host node-dhcp-pxe

Hostname node-dhcp-pxe

User cephuser

[cephuser@node01-ceph-admin ~]$ chmod 644 ~/.ssh/config

Deploy the SSH key

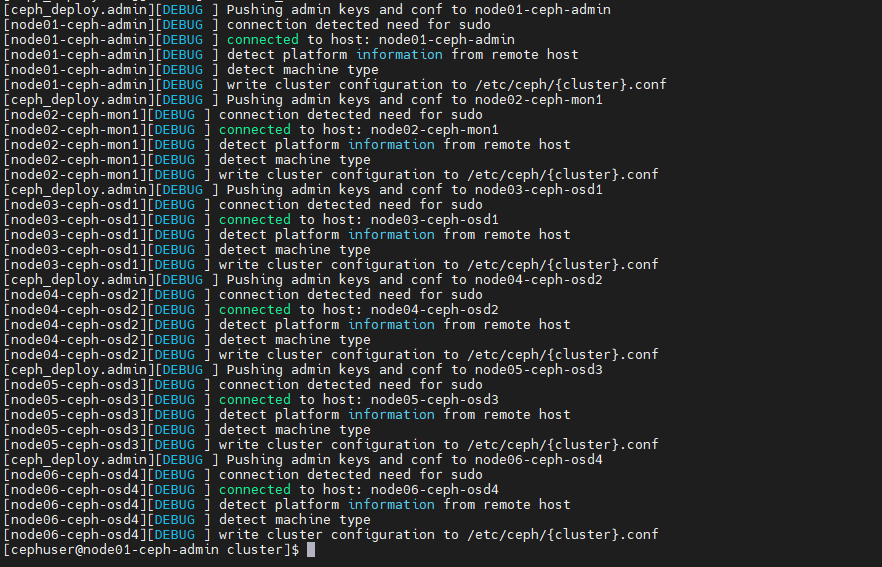

[cephuser@node01-ceph-admin ~]$ ssh-keyscan node-dhcp-pxe >> ~/.ssh/known_hosts

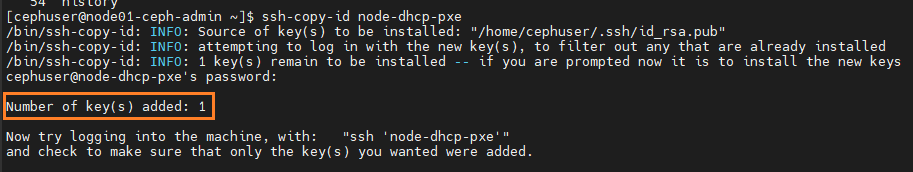

[cephuser@node01-ceph-admin ~]$ ssh-copy-id node-dhcp-pxe

Install Ceph on the client

[root@node01-ceph-admin ~]# su - cephuser

[cephuser@node01-ceph-admin ~]$ cd cluster

[cephuser@node01-ceph-admin cluster]$ ceph-deploy install node-dhcp-pxe

Deploy the cluster configuration

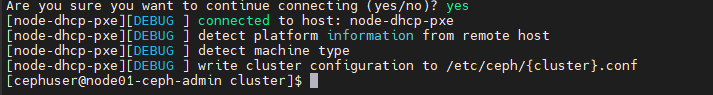

[cephuser@node01-ceph-admin cluster]$ ceph-deploy admin node-dhcp-pxe

[cephuser@node01-ceph-admin cluster]$ ssh node-dhcp-pxe

[cephuser@node-dhcp-pxe ~]$ sudo chmod 644 /etc/ceph/ceph.client.admin.keyring

Mount the disk on node-dhcp-pxe

node-dhcp-pxe

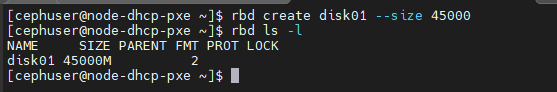

[cephuser@node01-ceph-admin cluster]$ ssh node-dhcp-pxe

[cephuser@node-dhcp-pxe ~]$ rbd create disk01 --size 45000

[cephuser@node-dhcp-pxe ~]$ rbd ls -l

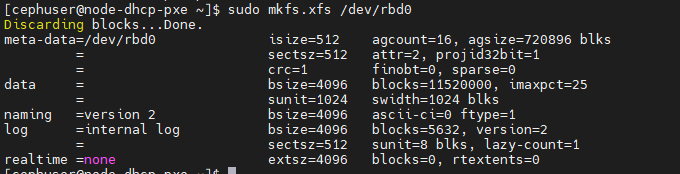

[cephuser@node-dhcp-pxe ~]$ sudo modprobe rbd

[cephuser@node-dhcp-pxe ~]$ sudo rbd feature disable disk01 exclusive-lock object-map fast-diff deep-flatten

[cephuser@node-dhcp-pxe ~]$ sudo rbd map disk01

/dev/rbd0

[cephuser@node-dhcp-pxe ~]$ rbd showmapped

id pool image snap device

0 rbd disk01 - /dev/rbd0

[cephuser@node-dhcp-pxe ~]$ sudo mkfs.xfs /dev/rbd0

[cephuser@node-dhcp-pxe ~]$ sudo mkdir -p /diskless

Create the script that mounts /dev/rbd0 at boot

[cephuser@node-dhcp-pxe ~]$ cd /usr/local/bin/

[cephuser@node-dhcp-pxe bin]$ sudo vi rbd-mount

#!/bin/bash

# Name of the OSD disk pool

export poolname=rbd

# Name of the rbd image

export rbdimage=disk01

# Mount point of the disk

export mountpoint=/diskless

# "m": map the image and mount it

if [ "$1" == "m" ]; then

modprobe rbd

rbd feature disable $rbdimage exclusive-lock object-map fast-diff deep-flatten

rbd map $rbdimage --id admin --keyring /etc/ceph/ceph.client.admin.keyring

mkdir -p $mountpoint

mount /dev/rbd/$poolname/$rbdimage $mountpoint

fi

# "u": unmount and unmap the image

if [ "$1" == "u" ]; then

umount $mountpoint

rbd unmap /dev/rbd/$poolname/$rbdimage

fi

[cephuser@node-dhcp-pxe bin]$ sudo chmod +x rbd-mount

Create the "rbd-mount.service" unit file

[cephuser@node-dhcp-pxe bin]$ cd /etc/systemd/system/

[cephuser@node-dhcp-pxe system]$ sudo vi rbd-mount.service

[Unit]

Description=RADOS block device mapping for disk01 in pool rbd

Conflicts=shutdown.target

Wants=network-online.target

After=NetworkManager-wait-online.service

[Service]

Type=oneshot

RemainAfterExit=yes

ExecStart=/usr/local/bin/rbd-mount m

ExecStop=/usr/local/bin/rbd-mount u

[Install]

WantedBy=multi-user.target

Start the "rbd-mount.service" service

[cephuser@node-dhcp-pxe system]$ sudo systemctl daemon-reload

[cephuser@node-dhcp-pxe system]$ sudo systemctl enable rbd-mount.service

[cephuser@node-dhcp-pxe system]$ sudo systemctl start rbd-mount.service

[cephuser@node-dhcp-pxe ~]$ df -h

A Ceph disk is now mounted at /diskless on the PXE machine.

Setting up diskless boot on "node-dhcp-pxe" via Ceph

Specification of the diskless Linux machines

node-dhcp-client0x

- vCPU: 2

- Memory: 2 GB

- Disk: none

- Network: Realtek RTL8139, PXE netboot

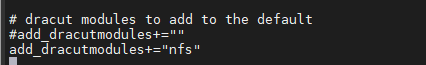

Install and configure dracut-network (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# yum install -y dracut-network

[root@node-dhcp-pxe ~]# vi /etc/dracut.conf

add_dracutmodules+="nfs"

Install xinetd and tftp-server (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# yum install -y xinetd tftp-server

[root@node-dhcp-pxe ~]# vi /etc/xinetd.d/tftp

service tftp

{

socket_type = dgram

protocol = udp

wait = yes

user = root

server = /usr/sbin/in.tftpd

server_args = -s /var/lib/tftpboot

disable = no

per_source = 11

cps = 100 2

flags = IPv4

}

[root@node-dhcp-pxe ~]# firewall-cmd --permanent --zone=public --add-service=tftp

[root@node-dhcp-pxe ~]# firewall-cmd --reload

[root@node-dhcp-pxe ~]# systemctl restart xinetd

Install and configure NFS (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# yum install -y nfs-utils

[root@node-dhcp-pxe ~]# vi /etc/exports

/diskless 172.16.186.0/24(rw,async,no_root_squash)

[root@node-dhcp-pxe ~]# systemctl restart nfs

[root@node-dhcp-pxe ~]# exportfs -ra

[root@node-dhcp-pxe ~]# firewall-cmd --permanent --zone=public --add-service=nfs

[root@node-dhcp-pxe ~]# firewall-cmd --reload

Install the CentOS 7 base system onto the /diskless NFS export (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# yum install @Base kernel dracut-network nfs-utils --installroot=/diskless/root --releasever=/

Install or remove packages on the /diskless export (examples)

[root@node-dhcp-pxe ~]# yum install nmap --installroot=/diskless/root --releasever=/

[root@node-dhcp-pxe ~]# yum install epel-release --installroot=/diskless/root --releasever=/

[root@node-dhcp-pxe ~]# yum install openssh-server --installroot=/diskless/root --releasever=/

Removal example:

[root@node-dhcp-pxe ~]# yum erase nmap --installroot=/diskless/root --releasever=/

Copy vmlinuz and the initramfs to the TFTP root (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# mkdir -p /var/lib/tftpboot/

[root@node-dhcp-pxe ~]# cp /boot/vmlinuz-3.10.0-1160.45.1.el7.x86_64 /var/lib/tftpboot/

[root@node-dhcp-pxe ~]# dracut --add nfs /var/lib/tftpboot/initramfs-3.10.0-1160.45.1.el7.x86_64.img 3.10.0-1160.45.1.el7.x86_64

[root@node-dhcp-pxe ~]# chmod 644 /var/lib/tftpboot/initramfs-3.10.0-1160.45.1.el7.x86_64.img

PXE boot menu served over TFTP (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# yum -y install syslinux

[root@node-dhcp-pxe ~]# cp /usr/share/syslinux/pxelinux.0 /var/lib/tftpboot/

[root@node-dhcp-pxe ~]# mkdir -p /var/lib/tftpboot/pxelinux.cfg

[root@node-dhcp-pxe ~]# vi /var/lib/tftpboot/pxelinux.cfg/default

default CentOS7

label CentOS7

kernel vmlinuz-3.10.0-1160.45.1.el7.x86_64

append initrd=initramfs-3.10.0-1160.45.1.el7.x86_64.img root=nfs:172.16.186.2:/diskless/root rw
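The kernel version appears twice in this menu entry and must match the file names copied to the TFTP root, so it has to be edited after every kernel update. A minimal sketch for regenerating the entry from three parameters follows; pxe_default_config is a hypothetical helper name, and it only prints the text.

```shell
#!/bin/sh
# Sketch: print a pxelinux.cfg/default entry for an NFS-root boot.
# pxe_default_config is a hypothetical helper.
# $1 = kernel version, $2 = NFS server IP, $3 = exported root path.
pxe_default_config() {
    kver=$1
    server=$2
    root=$3
    cat <<EOF
default CentOS7
label CentOS7
    kernel vmlinuz-${kver}
    append initrd=initramfs-${kver}.img root=nfs:${server}:${root} rw
EOF
}

# Values used in this tutorial.
pxe_default_config 3.10.0-1160.45.1.el7.x86_64 172.16.186.2 /diskless/root
```

Redirecting the output to /var/lib/tftpboot/pxelinux.cfg/default would replace the hand-edited file.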

[root@node-dhcp-pxe ~]# vi /etc/dhcp/dhcpd.conf

# Add the following lines

next-server 172.16.186.2;

filename "pxelinux.0";

[root@node-dhcp-pxe ~]# systemctl restart dhcpd

Create the per-client NFS /var directories (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# mkdir -p /diskless/node-dhcp-client01.house.cpb

[root@node-dhcp-pxe ~]# mkdir -p /diskless/node-dhcp-client02.house.cpb

[root@node-dhcp-pxe ~]# mkdir -p /diskless/node-dhcp-client03.house.cpb

[root@node-dhcp-pxe ~]# mkdir -p /diskless/node-dhcp-client04.house.cpb

[root@node-dhcp-pxe ~]# mkdir -p /diskless/node-dhcp-client05.house.cpb

[root@node-dhcp-pxe ~]# mkdir -p /diskless/node-dhcp-client06.house.cpb

Copy the /var tree (structure and data) for each client (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# cp -a /diskless/root/var /diskless/node-dhcp-client01.house.cpb

[root@node-dhcp-pxe ~]# cp -a /diskless/root/var /diskless/node-dhcp-client02.house.cpb

[root@node-dhcp-pxe ~]# cp -a /diskless/root/var /diskless/node-dhcp-client03.house.cpb

[root@node-dhcp-pxe ~]# cp -a /diskless/root/var /diskless/node-dhcp-client04.house.cpb

[root@node-dhcp-pxe ~]# cp -a /diskless/root/var /diskless/node-dhcp-client05.house.cpb

[root@node-dhcp-pxe ~]# cp -a /diskless/root/var /diskless/node-dhcp-client06.house.cpb
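The twelve commands above follow one pattern: create a directory named after the client's fully qualified name, then copy the template /var into it. A minimal sketch of the same steps as a loop is shown below. seed_client_var is a hypothetical helper name, and the demonstration runs against a temporary directory rather than the real /diskless.

```shell
#!/bin/sh
# Sketch: create one directory per client and copy the template /var tree into it.
# seed_client_var is a hypothetical helper.
# $1 = base directory (e.g. /diskless), $2 = DNS domain, remaining args = client names.
seed_client_var() {
    base=$1
    domain=$2
    shift 2
    for client in "$@"; do
        dir="$base/$client.$domain"
        mkdir -p "$dir"
        # -a preserves ownership, modes and timestamps, as in the commands above.
        cp -a "$base/root/var" "$dir"
    done
}

# Demonstration in a throwaway directory with a tiny stand-in for root/var.
demo=$(mktemp -d)
mkdir -p "$demo/root/var/log"
seed_client_var "$demo" house.cpb node-dhcp-client01 node-dhcp-client02
ls "$demo"
```

On the PXE server the call would take /diskless as the base directory and the six client names as arguments, run as root.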

Script that mounts /var over NFS on each client (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# vi /diskless/root/etc/rc.local

#!/bin/bash

# THIS FILE IS ADDED FOR COMPATIBILITY PURPOSES

#

# It is highly advisable to create own systemd services or udev rules

# to run scripts during boot instead of using this file.

#

# In contrast to previous versions due to parallel execution during boot

# this script will NOT be run after all other services.

#

# Please note that you must run 'chmod +x /etc/rc.d/rc.local' to ensure

# that this script will be executed during boot.

touch /var/lock/subsys/local

for DIR in var

do

mount -o rw 172.16.186.2:/diskless/$HOSTNAME/$DIR /$DIR

done

mount /dev/sda /tmp

if [ $? != 0 ]; then

mkfs.xfs /dev/sda

mount /dev/sda /tmp

fi

dhclient &

Enable the script at client boot (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# chroot /diskless/root/

bash-4.2# chmod +x /etc/rc.d/rc.local

bash-4.2# systemctl enable rc-local

bash-4.2# exit

Enable the SSH service (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# chroot /diskless/root/

bash-4.2# systemctl enable sshd

bash-4.2# systemctl start sshd

bash-4.2# exit

Set a password for the root superuser (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# chroot /diskless/root/

bash-4.2# passwd root

password:

retype password:

/etc/hosts file (node-dhcp-pxe)

[root@node-dhcp-pxe ~]# scp /etc/hosts /diskless/root/etc/hosts

Boot and check the diskless clients

Start all the clients

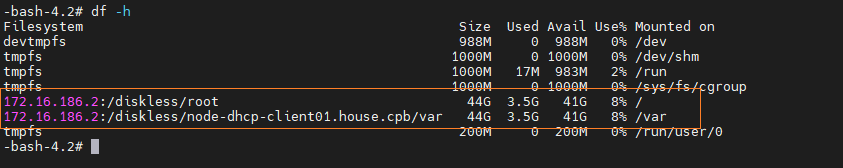

Client01: node-dhcp-client01

[root@node-dhcp-pxe ~]# tail -f /var/log/messages

[root@node-dhcp-pxe ~]# ssh -l root 172.16.186.10

-bash-4.2# df -h

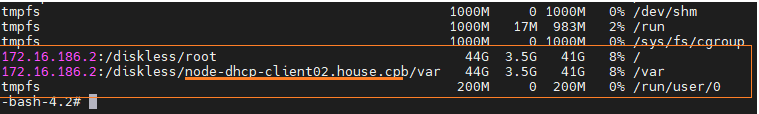

Client02: node-dhcp-client02

[root@node-dhcp-pxe ~]# tail -f /var/log/messages

[root@node-dhcp-pxe ~]# ssh -l root 172.16.186.11

-bash-4.2# df -h

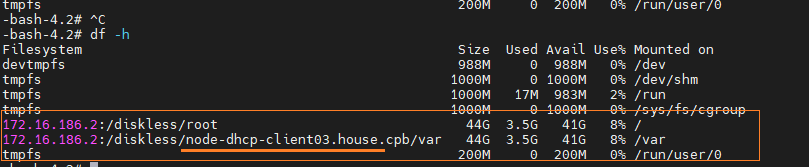

Client03: node-dhcp-client03

[root@node-dhcp-pxe ~]# tail -f /var/log/messages

[root@node-dhcp-pxe ~]# ssh -l root 172.16.186.12

-bash-4.2# df -h

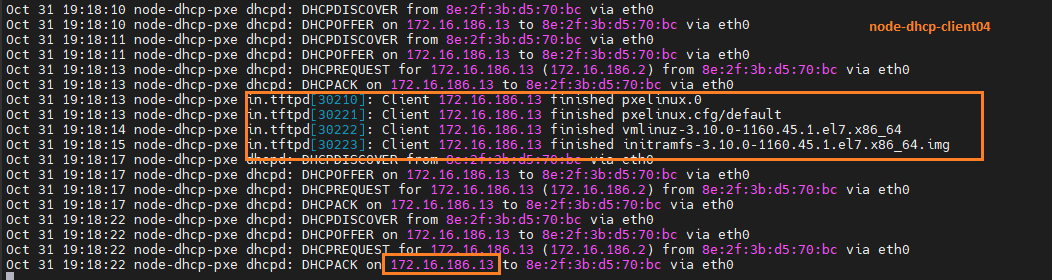

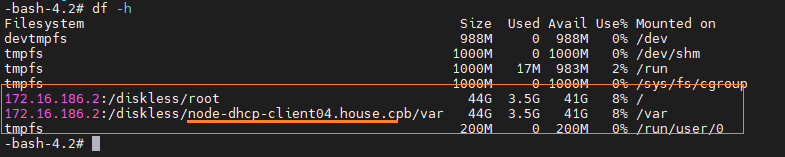

Client04: node-dhcp-client04

[root@node-dhcp-pxe ~]# tail -f /var/log/messages

[root@node-dhcp-pxe ~]# ssh -l root 172.16.186.13

-bash-4.2# df -h

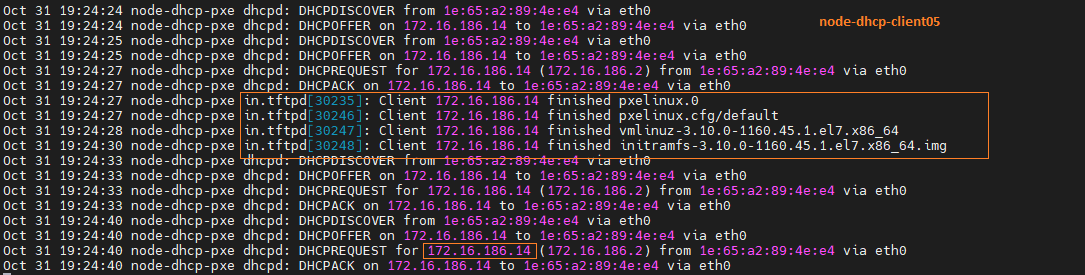

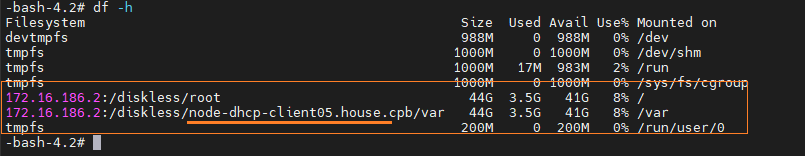

Client05: node-dhcp-client05

[root@node-dhcp-pxe ~]# tail -f /var/log/messages

[root@node-dhcp-pxe ~]# ssh -l root 172.16.186.14

-bash-4.2# df -h

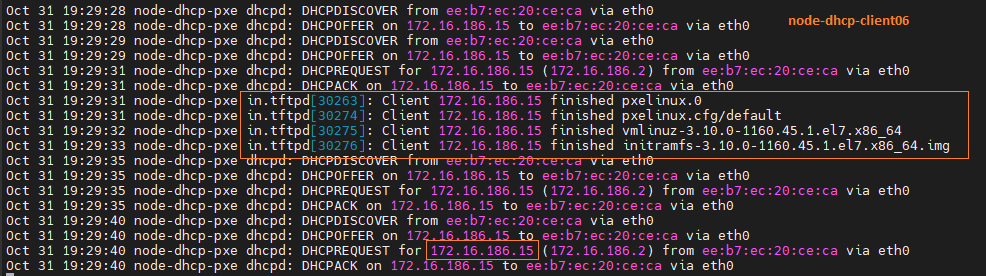

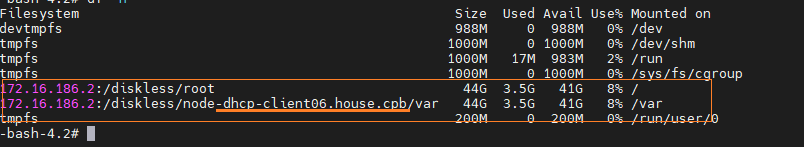

Client06: node-dhcp-client06

[root@node-dhcp-pxe ~]# tail -f /var/log/messages

[root@node-dhcp-pxe ~]# ssh -l root 172.16.186.15

-bash-4.2# df -h
