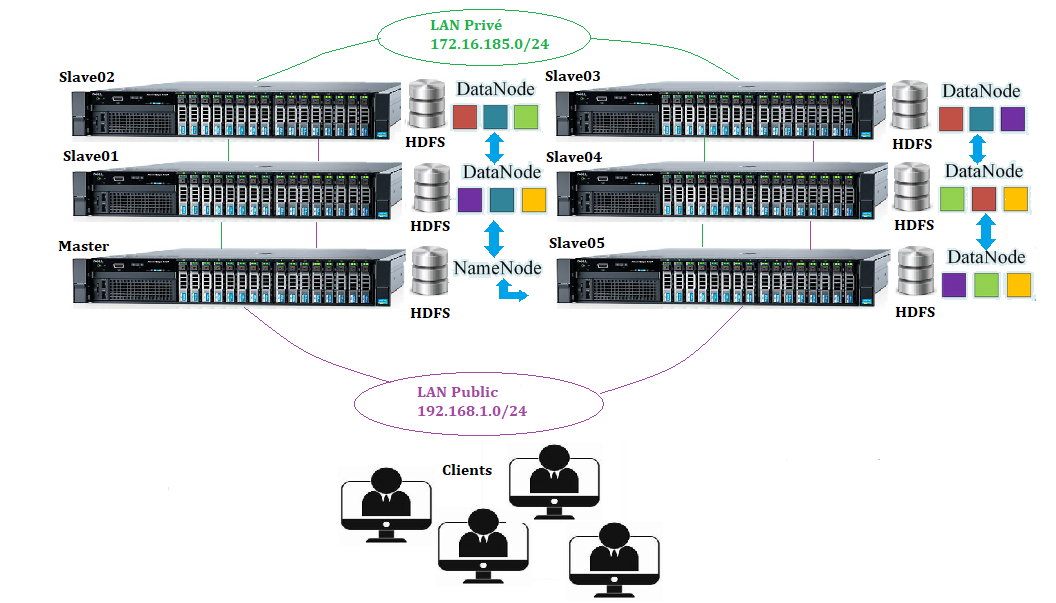

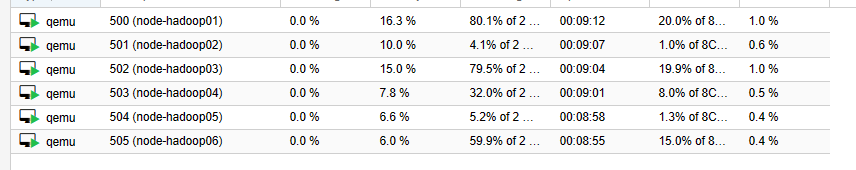

Machine inventory

Node-hadoop01 (NameNode)

- Public LAN: 192.168.1.50/24

- Private LAN: 172.16.185.50/24

Node-hadoop02 (DataNode 01)

- Public LAN: 192.168.1.51/24

- Private LAN: 172.16.185.51/24

Node-hadoop03 (DataNode 02)

- Public LAN: 192.168.1.52/24

- Private LAN: 172.16.185.52/24

Node-hadoop04 (DataNode 03)

- Public LAN: 192.168.1.53/24

- Private LAN: 172.16.185.53/24

Node-hadoop05 (DataNode 04)

- Public LAN: 192.168.1.54/24

- Private LAN: 172.16.185.54/24

Node-hadoop06 (DataNode 05)

- Public LAN: 192.168.1.55/24

- Private LAN: 172.16.185.55/24

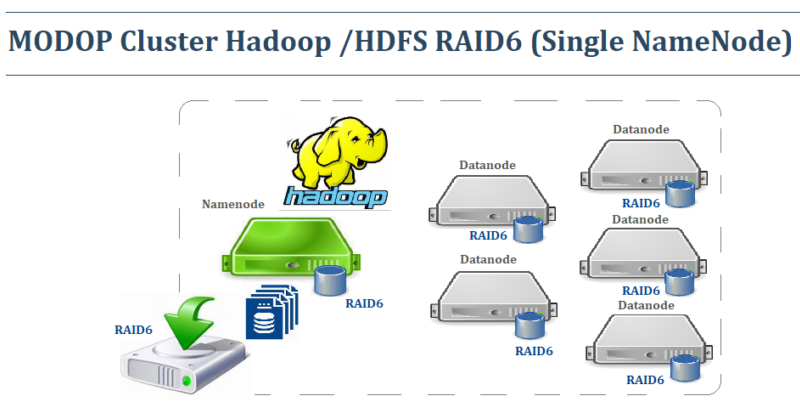

Hadoop node prerequisites

System update

[root@node-hadoop0x ~]# dnf update -y

Disabling SELinux

[root@node-hadoop0x ~]# setenforce 0

[root@node-hadoop0x ~]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

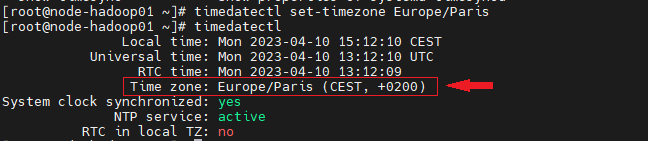

Setting the time zone to Paris

[root@node-hadoop0x ~]# timedatectl set-timezone Europe/Paris

Cluster hosts, private and public (no DNS)

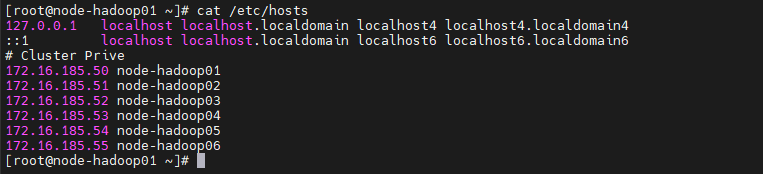

Private LAN hosts (172.16.185.0/24)

[root@node-hadoop0x ~]# echo "# Cluster Prive" >> /etc/hosts

[root@node-hadoop0x ~]# for i in {0..5}; do echo "172.16.185.5$i node-hadoop0$((i + 1))" >> /etc/hosts; done

[root@node-hadoop0x ~]# cat /etc/hosts

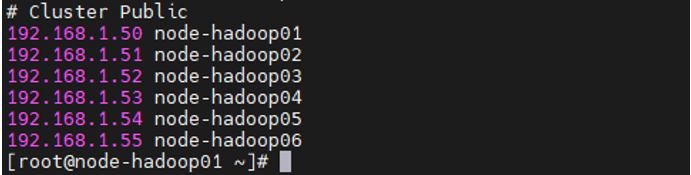

Public LAN hosts (192.168.1.0/24)

[root@node-hadoop0x ~]# echo "# Cluster Public" >> /etc/hosts

[root@node-hadoop0x ~]# for i in {0..5}; do echo "192.168.1.5$i hadoop0$((i + 1))" >> /etc/hosts; done

[root@node-hadoop0x ~]# cat /etc/hosts
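The two loops above append to /etc/hosts every time they run, so a second run duplicates the entries. A minimal sketch of an idempotent variant follows. The function names `gen_hosts` and `add_hosts` are hypothetical, and the demonstration writes to a temporary file rather than to /etc/hosts.

```shell
#!/usr/bin/env bash
# Print the six host entries for one network.
# $1 = first three octets of the network, $2 = host name prefix.
gen_hosts() {
  local net="$1" prefix="$2" i
  for i in {0..5}; do
    printf '%s.5%d %s0%d\n' "$net" "$i" "$prefix" "$((i + 1))"
  done
}

# Append the generated entries to a hosts file, skipping lines already present.
# $1 = target file (hypothetical parameter; /etc/hosts on a real node).
add_hosts() {
  local file="$1" line
  shift
  gen_hosts "$@" | while IFS= read -r line; do
    grep -qxF "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
  done
}

# Demonstration on a temporary file instead of /etc/hosts.
tmp_hosts="$(mktemp)"
add_hosts "$tmp_hosts" 172.16.185 node-hadoop
add_hosts "$tmp_hosts" 172.16.185 node-hadoop   # second run adds nothing
add_hosts "$tmp_hosts" 192.168.1 hadoop
cat "$tmp_hosts"
```

On a real node the target would be /etc/hosts and the script would run as root.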

Stopping firewalld (all nodes)

[root@node-hadoop0x ~]# systemctl stop firewalld

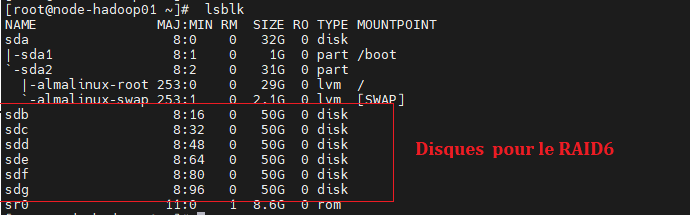

Creating the RAID 6 array for HDFS (all nodes)

Disk inventory

[root@node-hadoop0x ~]# lsblk

Installing software RAID

[root@node-hadoop0x ~]# dnf -y install mdadm

[root@node-hadoop0x ~]# echo "modprobe raid6" >> /etc/rc.local

[root@node-hadoop0x ~]# chmod +x /etc/rc.local

[root@node-hadoop0x ~]# source /etc/rc.local

[root@node-hadoop01 ~]# cat /proc/mdstat

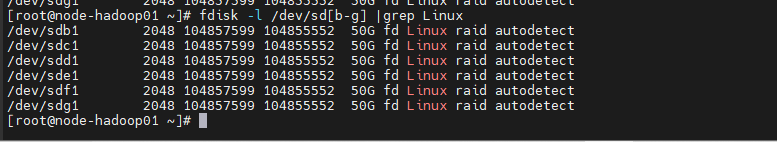

Preparing the disks

[root@node-hadoop0x ~]# for disk in sdb sdc sdd sde sdf sdg; do parted -s /dev/$disk mklabel msdos; done

[root@node-hadoop0x ~]# for disk in sdb sdc sdd sde sdf sdg; do parted -s /dev/$disk mkpart primary 1MiB 100%; done

[root@node-hadoop0x ~]# for disk in sdb sdc sdd sde sdf sdg; do parted -s /dev/$disk set 1 raid on; done

[root@node-hadoop0x ~]# fdisk -l /dev/sd[b-g] | grep Linux

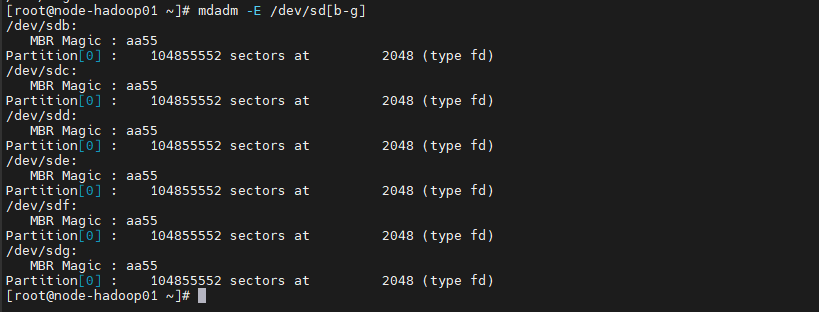

[root@node-hadoop0x ~]# mdadm -E /dev/sd[b-g]

Creating the RAID 6 array

[root@node-hadoop0x ~]# mdadm --create /dev/md0 --level=6 --raid-devices=6 /dev/sd[b-g]1

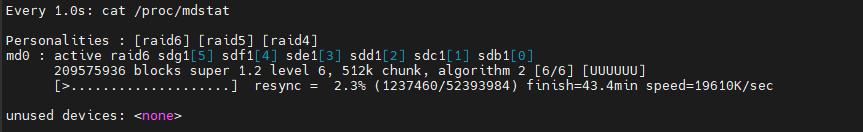

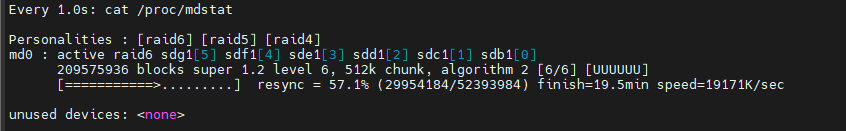

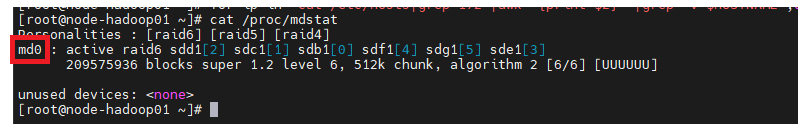

[root@node-hadoop0x ~]# watch -n1 cat /proc/mdstat

Start of the RAID build

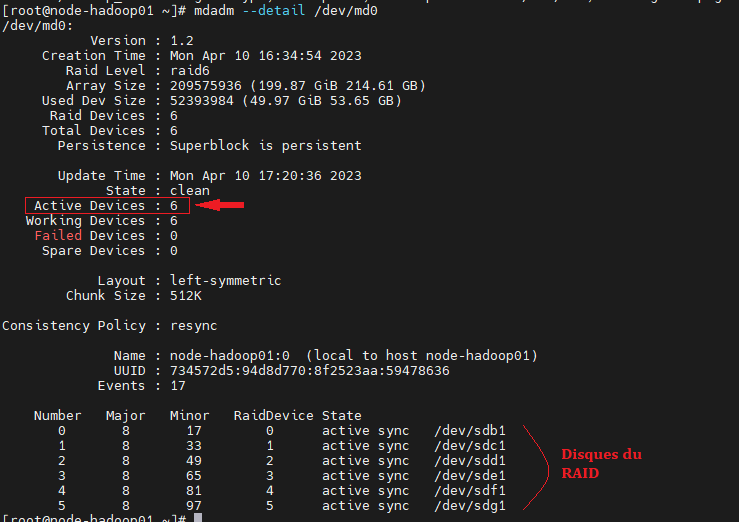

[root@node-hadoop0x ~]# mdadm --detail /dev/md0

[root@node-hadoop0x ~]# mkfs.xfs -f /dev/md0
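RAID 6 keeps two devices' worth of parity, so the usable size of the array is (number of devices minus 2) times the device size. The sketch below works through that arithmetic. The 50 GB device size is an assumption for illustration (the text does not give the disk size), and `raid6_usable_gb` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Usable capacity of a RAID 6 array: two devices' worth of space goes to parity.
# $1 = number of member devices, $2 = size of each device in GB.
raid6_usable_gb() {
  local disks="$1" size_gb="$2"
  if [ "$disks" -lt 4 ]; then
    echo "RAID 6 needs at least 4 devices" >&2
    return 1
  fi
  echo $(( (disks - 2) * size_gb ))
}

# Six members as in the mdadm command above; 50 GB each is an assumed size.
raid6_usable_gb 6 50   # prints 200
```

The result can be compared with the "Array Size" line reported by `mdadm --detail /dev/md0`.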

Accounts and directory structure (all nodes)

Creating the mount point for the RAID array (/dev/md0)

[root@node-hadoop0x ~]# mkdir /hadoop_dir

[root@node-hadoop0x ~]# echo "/dev/md0 /hadoop_dir xfs defaults 0 0" >> /etc/fstab

[root@node-hadoop0x ~]# mount /hadoop_dir

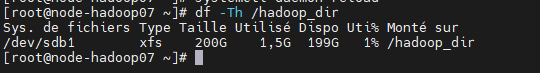

[root@node-hadoop0x ~]# df -Th /hadoop_dir

Creating the hadoop user and group

[root@node-hadoop0x ~]# groupadd hadoop

[root@node-hadoop0x ~]# useradd hduser

[root@node-hadoop0x ~]# passwd hduser

[root@node-hadoop0x ~]# usermod -G hadoop hduser

Creating the HDFS directory structure on the RAID array

For the NameNode

[root@node-hadoop0x ~]# mkdir /hadoop_dir/hdfs

[root@node-hadoop0x ~]# mkdir /hadoop_dir/hdfs/namenode

For the DataNodes

[root@node-hadoop01 ~]# mkdir /hadoop_dir/hdfs/datanode (optional)

[root@node-hadoop02 ~]# mkdir /hadoop_dir/hdfs/datanode

[root@node-hadoop03 ~]# mkdir /hadoop_dir/hdfs/datanode

[root@node-hadoop04 ~]# mkdir /hadoop_dir/hdfs/datanode

[root@node-hadoop05 ~]# mkdir /hadoop_dir/hdfs/datanode

[root@node-hadoop06 ~]# mkdir /hadoop_dir/hdfs/datanode

Adjusting ownership

[root@node-hadoop0x ~]# chown hduser:hadoop -R /hadoop_dir

Downloading Hadoop and Java (all nodes)

JDK package

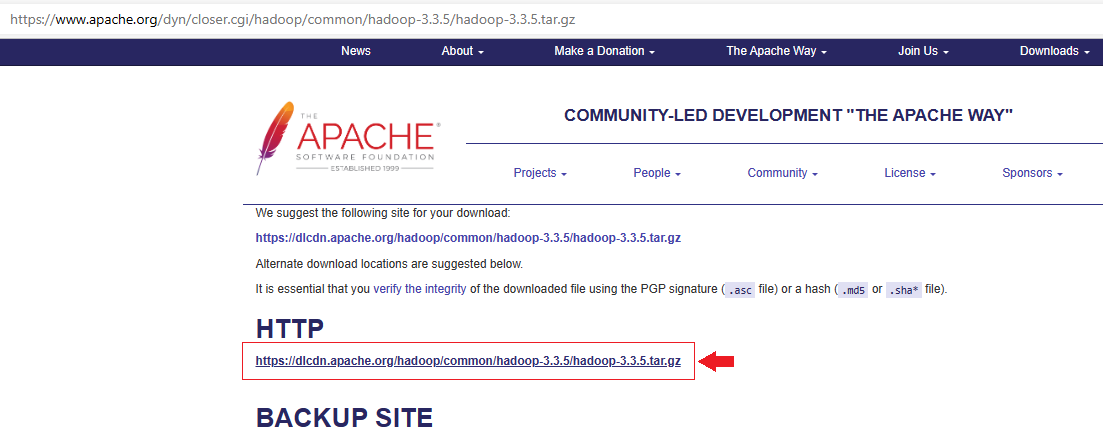

Hadoop package

[root@node-hadoop0x hduser]# dnf install wget tar sshpass vim -y

[root@node-hadoop0x hduser]# wget https://dlcdn.apache.org/hadoop/common/hadoop-3.3.5/hadoop-3.3.5.tar.gz

[root@node-hadoop0x hduser]# chown hduser:hadoop *

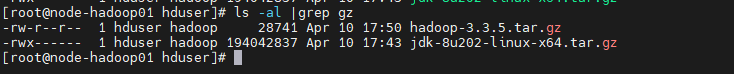

[root@node-hadoop0x hduser]# ls -al | grep gz

Installing the packages (all nodes)

JDK package

Installing the JDK

[root@node-hadoop0x hduser]# tar -xvzf jdk-8u202-linux-x64.tar.gz -C /opt

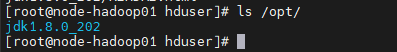

[root@node-hadoop0x hduser]# ls /opt/

Adding the environment variables

[root@node-hadoop0x hduser]# su - hduser

[hduser@node-hadoop0x ~]$ vi ~/.bashrc

#export JAVA_HOME=/opt/jdk1.8.0_202

export JAVA_HOME=$(readlink -f /usr/bin/java | sed "s:bin/java::")

export PATH=$JAVA_HOME/bin:$PATH

[hduser@node-hadoop0x ~]$ source ~/.bashrc

Hadoop package

Installing Hadoop

[root@node-hadoop0x hduser]# tar -xzvf hadoop-3.3.5.tar.gz -C /opt/

[root@node-hadoop0x hduser]# mv /opt/hadoop-3.3.5/ /opt/hadoop/

[root@node-hadoop0x hduser]# chown -R hduser:hadoop /opt/hadoop/

Adding the environment variables

[root@node-hadoop0x hduser]# su - hduser

[hduser@node-hadoop0x ~]$ vi ~/.bashrc

export PATH=${PATH}:/opt/jdk1.8.0_202/bin

#export JAVA_HOME=/opt/jdk1.8.0_202

#export PATH=$JAVA_HOME/bin:$PATH

export JAVA_HOME=$(readlink -f /opt/jdk1.8.0_202/bin/java | sed "s:/bin/java::")

export HADOOP_HOME=/opt/hadoop

export HADOOP_INSTALL=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export PATH=$PATH:$HADOOP_INSTALL/bin

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

#export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"

export PATH=/opt/jdk1.8.0_202/bin:$PATH

[hduser@node-hadoop0x ~]$ source ~/.bashrc
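The `JAVA_HOME` lines above derive the JDK directory by stripping the end of the resolved `java` path with `sed`. The two patterns used in this guide differ slightly: `s:/bin/java::` removes the slash as well, while `s:bin/java::` leaves a trailing `/`. The sketch below shows both on a literal path, without calling `readlink`, so it runs even where the JDK is not installed. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Strip the trailing "/bin/java" from a java binary path.
java_home_from() {
  printf '%s\n' "$1" | sed "s:/bin/java::"
}

# Same idea with the pattern "bin/java" (no leading slash):
# the result keeps a trailing "/".
java_home_trailing_slash() {
  printf '%s\n' "$1" | sed "s:bin/java::"
}

java_home_from /opt/jdk1.8.0_202/bin/java            # prints /opt/jdk1.8.0_202
java_home_trailing_slash /opt/jdk1.8.0_202/bin/java  # prints /opt/jdk1.8.0_202/
```

Both results work as `JAVA_HOME`; the trailing slash only produces a doubled `/` in `$JAVA_HOME/bin`, which the shell tolerates.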

Adding the Hadoop logs directory

[hduser@node-hadoop0x ~]$ mkdir /opt/hadoop/logs

[hduser@node-hadoop0x ~]$ chown -R hduser:hadoop /opt/hadoop/logs

Creating an SSH key on each node (all nodes)

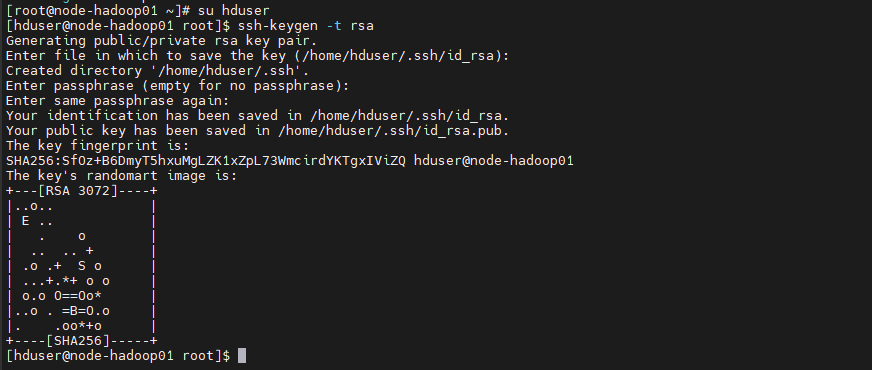

node-hadoop01

[hduser@node-hadoop01 ~]$ ssh-keygen -t rsa

[hduser@node-hadoop01 ~]$ echo "hduser_password" > /home/hduser/.hduser

[hduser@node-hadoop01 ~]$ chmod 600 /home/hduser/.hduser

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |awk '{print $2}'`;do sshpass -f /home/hduser/.hduser ssh-copy-id -o StrictHostKeyChecking=no hduser@${ssh}; done

node-hadoop02

[hduser@node-hadoop02 ~]$ ssh-keygen -t rsa

[hduser@node-hadoop02 ~]$ echo "hduser_password" > /home/hduser/.hduser

[hduser@node-hadoop02 ~]$ chmod 600 /home/hduser/.hduser

[hduser@node-hadoop02 ~]$ for ssh in `cat /etc/hosts |grep node |awk '{print $2}'`;do sshpass -f /home/hduser/.hduser ssh-copy-id -o StrictHostKeyChecking=no hduser@${ssh}; done

node-hadoop03

[hduser@node-hadoop03 ~]$ ssh-keygen -t rsa

[hduser@node-hadoop03 ~]$ echo "hduser_password" > /home/hduser/.hduser

[hduser@node-hadoop03 ~]$ chmod 600 /home/hduser/.hduser

[hduser@node-hadoop03 ~]$ for ssh in `cat /etc/hosts |grep node |awk '{print $2}'`;do sshpass -f /home/hduser/.hduser ssh-copy-id -o StrictHostKeyChecking=no hduser@${ssh}; done

node-hadoop04

[hduser@node-hadoop04 ~]$ ssh-keygen -t rsa

[hduser@node-hadoop04 ~]$ echo "hduser_password" > /home/hduser/.hduser

[hduser@node-hadoop04 ~]$ chmod 600 /home/hduser/.hduser

[hduser@node-hadoop04 ~]$ for ssh in `cat /etc/hosts |grep node |awk '{print $2}'`;do sshpass -f /home/hduser/.hduser ssh-copy-id -o StrictHostKeyChecking=no hduser@${ssh}; done

node-hadoop05

[hduser@node-hadoop05 ~]$ ssh-keygen -t rsa

[hduser@node-hadoop05 ~]$ echo "hduser_password" > /home/hduser/.hduser

[hduser@node-hadoop05 ~]$ chmod 600 /home/hduser/.hduser

[hduser@node-hadoop05 ~]$ for ssh in `cat /etc/hosts |grep node |awk '{print $2}'`;do sshpass -f /home/hduser/.hduser ssh-copy-id -o StrictHostKeyChecking=no hduser@${ssh}; done

node-hadoop06

[hduser@node-hadoop06 ~]$ ssh-keygen -t rsa

[hduser@node-hadoop06 ~]$ echo "hduser_password" > /home/hduser/.hduser

[hduser@node-hadoop06 ~]$ chmod 600 /home/hduser/.hduser

[hduser@node-hadoop06 ~]$ for ssh in `cat /etc/hosts |grep node |awk '{print $2}'`;do sshpass -f /home/hduser/.hduser ssh-copy-id -o StrictHostKeyChecking=no hduser@${ssh}; done

Checking the keys (node01)

[hduser@node-hadoop06 ~]$ ssh hduser@node-hadoop01

[hduser@node-hadoop01 ~]$ cat .ssh/authorized_keys
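The key-distribution loops above all build the node list from /etc/hosts with `grep` and `awk`. A minimal sketch of the same selection as a reusable function follows. `cluster_nodes` is a hypothetical name, and the demonstration reads a temporary file that mimics the /etc/hosts layout created earlier.

```shell
#!/usr/bin/env bash
# List the host names (second field) of hosts-file entries whose name
# contains "node", optionally skipping one host such as the local one.
# $1 = hosts file, $2 = host name to skip (optional).
cluster_nodes() {
  local hosts_file="$1" skip="${2:-}"
  awk -v skip="$skip" '$2 ~ /node/ && $2 != skip { print $2 }' "$hosts_file"
}

# Demonstration on a temporary file shaped like the /etc/hosts built earlier.
demo_hosts="$(mktemp)"
cat > "$demo_hosts" <<'EOF'
127.0.0.1 localhost
# Cluster Prive
172.16.185.50 node-hadoop01
172.16.185.51 node-hadoop02
# Cluster Public
192.168.1.50 hadoop01
EOF

cluster_nodes "$demo_hosts" node-hadoop01   # prints node-hadoop02
```

On a cluster node the call would be `cluster_nodes /etc/hosts "$HOSTNAME"`, and its output can feed the `for ssh in ...` loops.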

Hadoop configuration (all nodes)

Setting JAVA_HOME

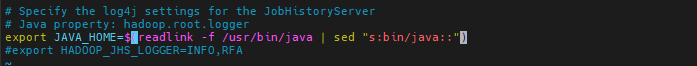

mapred-env.sh configuration file (master)

[hduser@node-hadoop01 ~]$ vim /opt/hadoop/etc/hadoop/mapred-env.sh

export JAVA_HOME=$(readlink -f /usr/bin/java | sed "s:bin/java::")

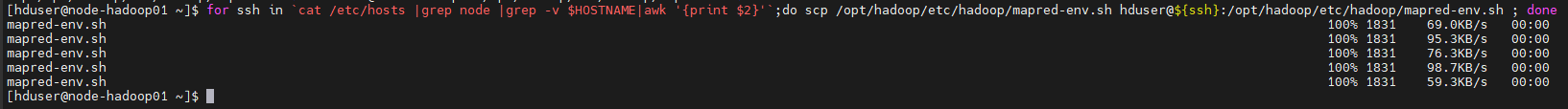

Copying mapred-env.sh (to the DataNodes)

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |grep -v $HOSTNAME|awk '{print $2}'`;do scp /opt/hadoop/etc/hadoop/mapred-env.sh hduser@${ssh}:/opt/hadoop/etc/hadoop/mapred-env.sh ; done

Hadoop site configuration

core-site.xml file

core-site.xml file (master)

[hduser@node-hadoop01 ~]$ vi /opt/hadoop/etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://node-hadoop01:50000</value>
  </property>
</configuration>

Deploying core-site.xml (DataNodes)

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |grep -v $HOSTNAME|awk '{print $2}'`;do scp /opt/hadoop/etc/hadoop/core-site.xml hduser@${ssh}:/opt/hadoop/etc/hadoop/core-site.xml ; done
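Every Hadoop site file in this guide has the same shape: a `<configuration>` element wrapping `<property>` name/value pairs. As an alternative to editing each file by hand, the sketch below prints such a document from pairs given on the command line. The function names are hypothetical, and the values are written as-is with no XML escaping, which is sufficient for the host:port and path values used here.

```shell
#!/usr/bin/env bash
# Print one Hadoop <property> element. $1 = name, $2 = value.
# Values are emitted verbatim (no XML escaping).
hadoop_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Print a complete site document from "name value" argument pairs.
hadoop_site_xml() {
  printf '<configuration>\n'
  while [ "$#" -ge 2 ]; do
    hadoop_property "$1" "$2"
    shift 2
  done
  printf '</configuration>\n'
}

# Same content as the core-site.xml shown above, printed to standard output.
hadoop_site_xml fs.defaultFS hdfs://node-hadoop01:50000
```

Redirecting the output to /opt/hadoop/etc/hadoop/core-site.xml would replace the manual `vi` step.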

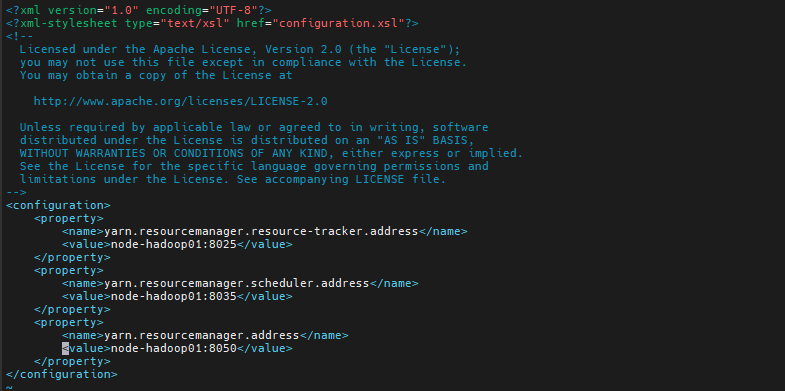

yarn-site.xml file

yarn-site.xml file (master)

[hduser@node-hadoop01 ~]$ vim /opt/hadoop/etc/hadoop/yarn-site.xml

<configuration>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>node-hadoop01:8025</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>node-hadoop01:8035</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>node-hadoop01:8050</value>
  </property>
</configuration>

Deploying yarn-site.xml (DataNodes)

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |grep -v $HOSTNAME|awk '{print $2}'`;do scp /opt/hadoop/etc/hadoop/yarn-site.xml hduser@${ssh}:/opt/hadoop/etc/hadoop/yarn-site.xml ; done

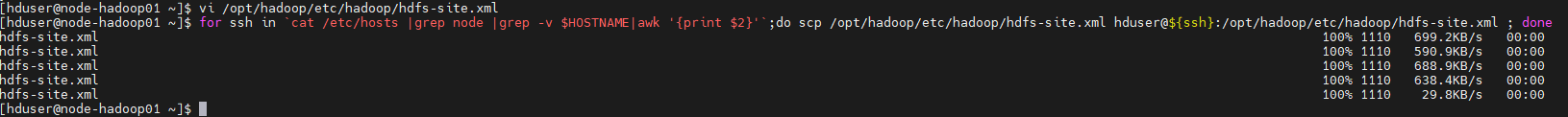

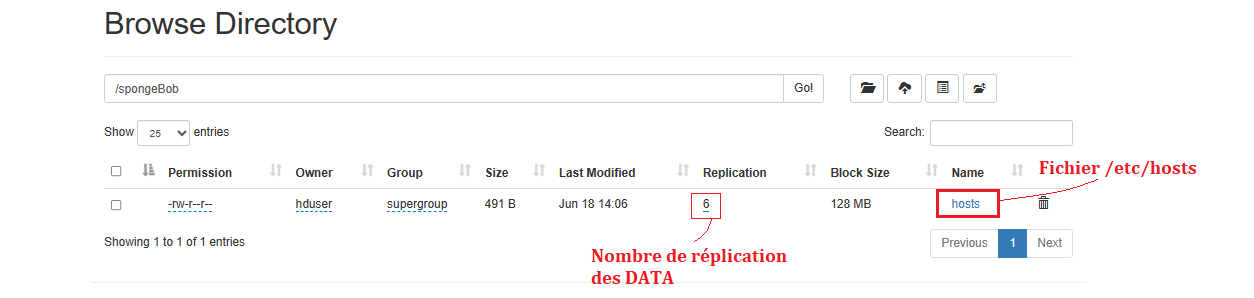

HDFS configuration (block-based distributed file system)

hdfs-site.xml file

hdfs-site.xml file (master)

[hduser@node-hadoop01 ~]$ vi /opt/hadoop/etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>6</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file:///hadoop_dir/hdfs/datanode</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file:///hadoop_dir/hdfs/namenode</value>
  </property>
</configuration>

Deploying hdfs-site.xml (DataNodes)

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |grep -v $HOSTNAME|awk '{print $2}'`;do scp /opt/hadoop/etc/hadoop/hdfs-site.xml hduser@${ssh}:/opt/hadoop/etc/hadoop/hdfs-site.xml ; done

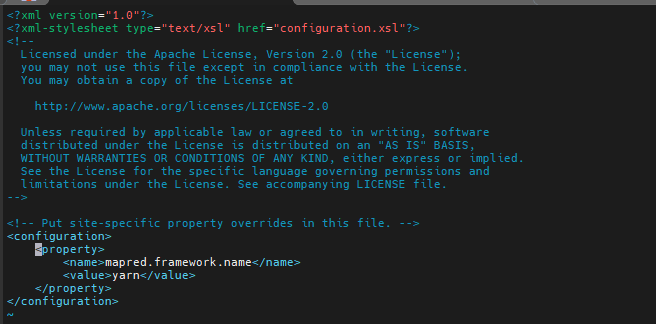

MapReduce configuration

mapred-site.xml file

mapred-site.xml file (master)

[hduser@node-hadoop01 ~]$ vim /opt/hadoop/etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

Deploying mapred-site.xml (DataNodes)

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |grep -v $HOSTNAME|awk '{print $2}'`;do scp /opt/hadoop/etc/hadoop/mapred-site.xml hduser@${ssh}:/opt/hadoop/etc/hadoop/mapred-site.xml ; done
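The deployment loops above repeat the same `scp` command once per configuration file. A minimal sketch that pushes several files in one pass follows. `deploy_conf` and the `DRY_RUN` switch are hypothetical names, and the sketch defaults to printing the commands instead of running them, so it can be tried safely off the cluster.

```shell
#!/usr/bin/env bash
CONF_DIR=/opt/hadoop/etc/hadoop
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the scp commands (default in this sketch)

# Copy each listed file from CONF_DIR to the same path on each node.
# $1 = space-separated node names, remaining arguments = file names.
deploy_conf() {
  local nodes="$1" node file
  shift
  for node in $nodes; do
    for file in "$@"; do
      if [ "$DRY_RUN" = 1 ]; then
        echo "scp $CONF_DIR/$file hduser@$node:$CONF_DIR/$file"
      else
        scp "$CONF_DIR/$file" "hduser@$node:$CONF_DIR/$file"
      fi
    done
  done
}

# Dry run for two nodes and two files: prints four scp commands.
deploy_conf "node-hadoop02 node-hadoop03" core-site.xml hdfs-site.xml
```

Running with `DRY_RUN=0` on node-hadoop01 would perform the copies, relying on the SSH keys distributed earlier.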

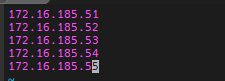

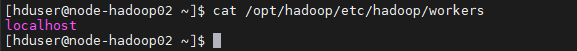

Configuring the workers file (formerly « slaves »)

[hduser@node-hadoop01 ~]$ vi /opt/hadoop/etc/hadoop/workers

List one private IP address per line. Include 172.16.185.50 only if the master is also used as a DataNode.

172.16.185.50

172.16.185.51

172.16.185.52

172.16.185.53

172.16.185.54

172.16.185.55

workers file (master)

Private IP addresses of the worker nodes

workers file (DataNodes)

[hduser@node-hadoop0x ~]$ cat /opt/hadoop/etc/hadoop/workers

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |grep -v $HOSTNAME|awk '{print $2}'`;do ssh hduser@$ssh -t "cat /opt/hadoop/etc/hadoop/workers" ; done

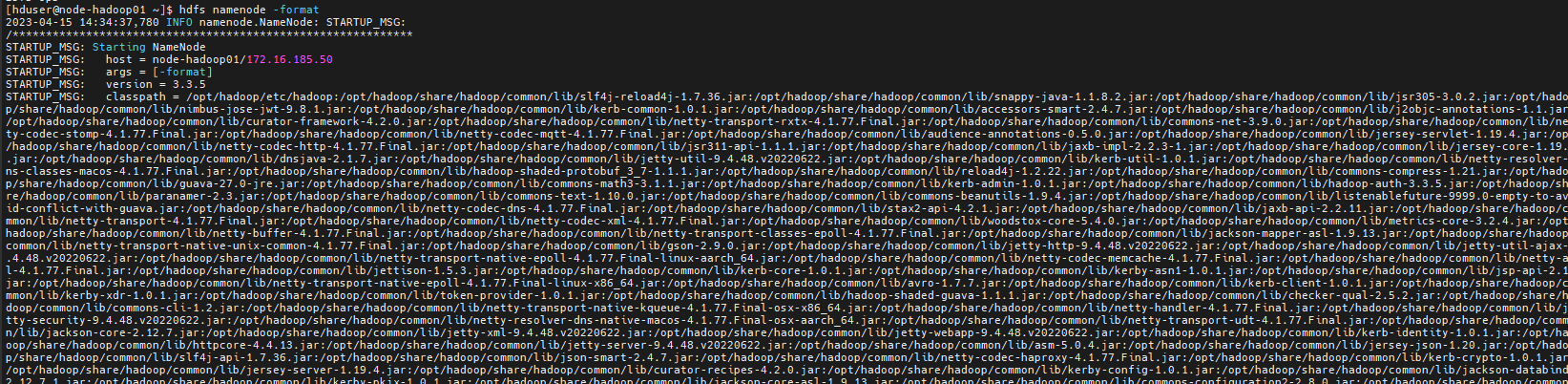

Starting the Hadoop cluster and HDFS

Formatting the NameNode (master)

[hduser@node-hadoop01 ~]$ hdfs namenode -format

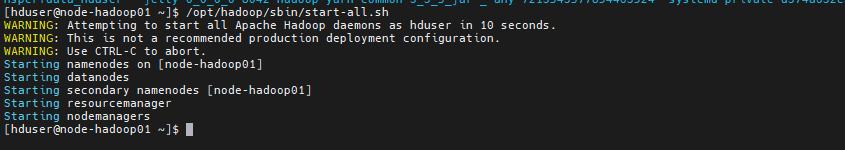

Stopping the cluster (master)

[hduser@node-hadoop01 ~]$ /opt/hadoop/sbin/stop-all.sh

Starting the cluster (master)

[hduser@node-hadoop01 ~]$ /opt/hadoop/sbin/start-all.sh

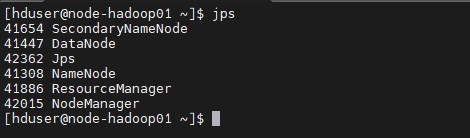

Cluster service status

Master status

[hduser@node-hadoop01 ~]$ jps
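`jps` prints one line per running Java process in the form `<pid> <name>`, so the check above can be automated by comparing that output with the daemons expected on the node. The sketch below is one way to do it. `missing_daemons` is a hypothetical helper, the sample PIDs are invented, and the expected list (NameNode, SecondaryNameNode, ResourceManager) is an assumption about what `start-all.sh` usually starts on the master; adjust it to the actual layout.

```shell
#!/usr/bin/env bash
# Read jps output on stdin and print the expected daemons that are absent.
# Arguments: the daemon names expected on this node.
missing_daemons() {
  local output daemon missing=""
  output="$(cat)"
  for daemon in "$@"; do
    # Match the daemon name against the whole second field of each line.
    printf '%s\n' "$output" \
      | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' \
      || missing="$missing $daemon"
  done
  printf '%s\n' "${missing# }"
}

# Sample input for illustration only (the PIDs are invented).
printf '2101 NameNode\n2345 ResourceManager\n2600 Jps\n' \
  | missing_daemons NameNode SecondaryNameNode ResourceManager
# prints SecondaryNameNode
```

On the master the call would be `jps | missing_daemons NameNode SecondaryNameNode ResourceManager`; an empty line means nothing is missing.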

Worker status

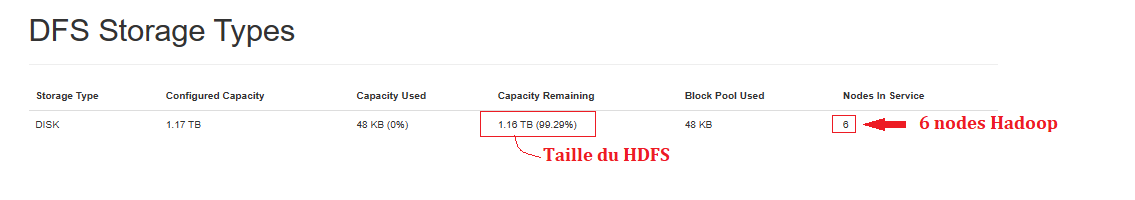

Taille HDFS

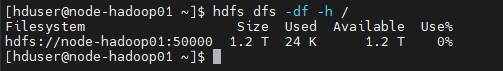

Sur le master

[hduser@node-hadoop01 ~]$ hdfs dfs -df -h /

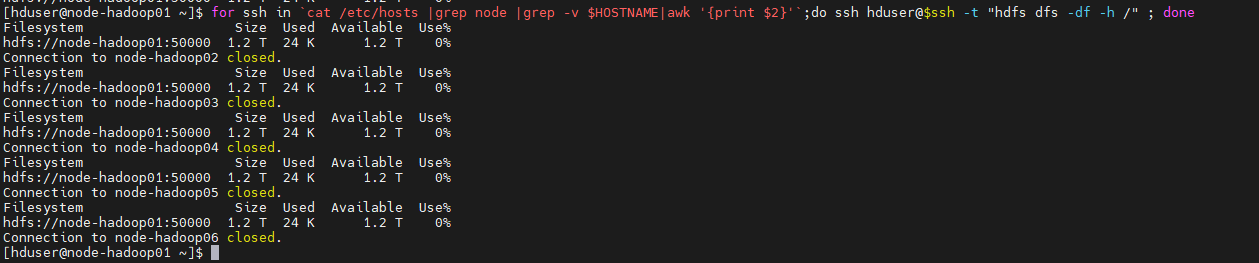

On the DataNodes

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |grep -v $HOSTNAME|awk '{print $2}'`;do ssh hduser@$ssh -t "hdfs dfs -df -h /" ; done

This confirms that HDFS aggregates the 6 nodes of 200 GB each (RAID 6): 6 x 200 GB = 1.2 TB.
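The 1.2 TB figure is raw capacity. With `dfs.replication` set to 6 in hdfs-site.xml, each block is stored six times, so the room for distinct data is roughly the raw capacity divided by the replication factor. The sketch below works through both numbers. The helper names are hypothetical, and the estimate ignores reserved and non-DFS space.

```shell
#!/usr/bin/env bash
# Raw HDFS capacity in GB. $1 = number of DataNodes, $2 = capacity per node in GB.
hdfs_raw_gb() {
  echo $(( $1 * $2 ))
}

# Approximate capacity for distinct data when every block is stored
# $3 times (the replication factor). Integer division, reserved space ignored.
hdfs_effective_gb() {
  echo $(( $1 * $2 / $3 ))
}

hdfs_raw_gb 6 200          # prints 1200
hdfs_effective_gb 6 200 6  # prints 200
```

Lowering the replication factor raises the effective capacity in the same proportion.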

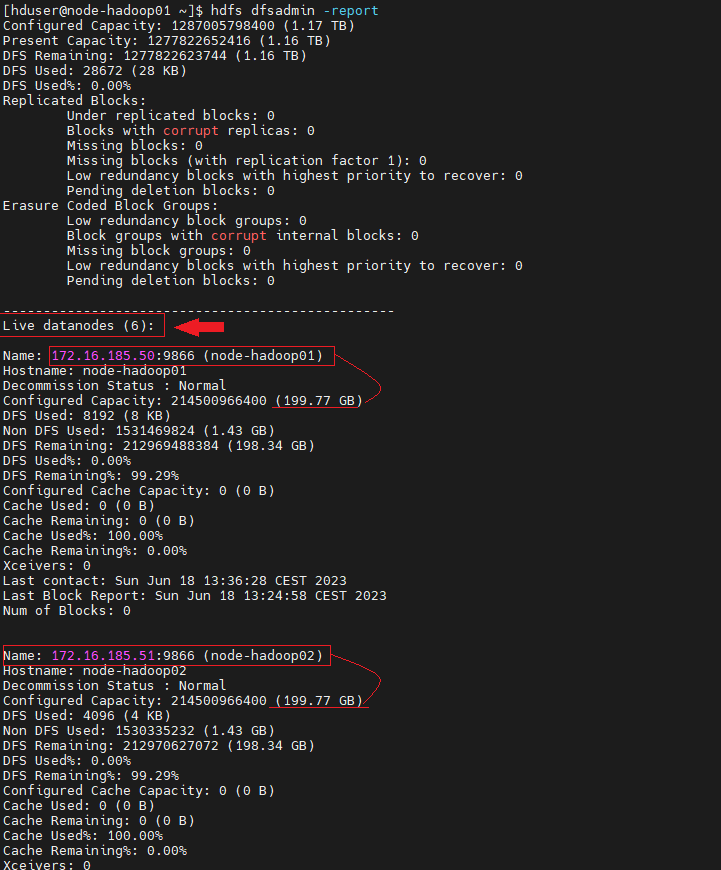

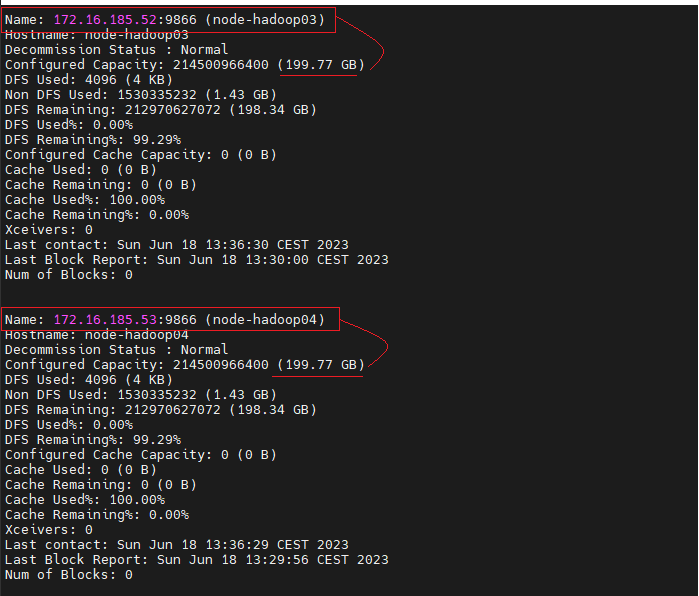

HDFS status

[hduser@node-hadoop01 sbin]$ hdfs dfsadmin -report

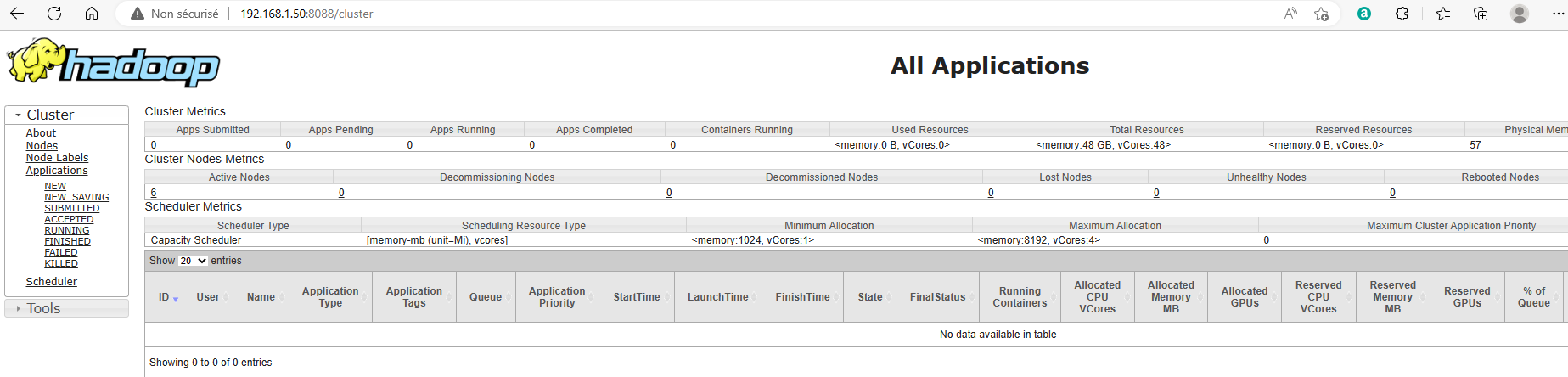

Web UI view (ResourceManager)

- http://IP_Publique_Master:8088

« Nodes » page of the cluster

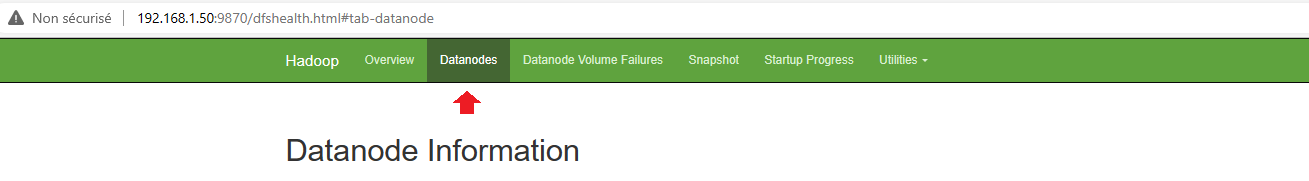

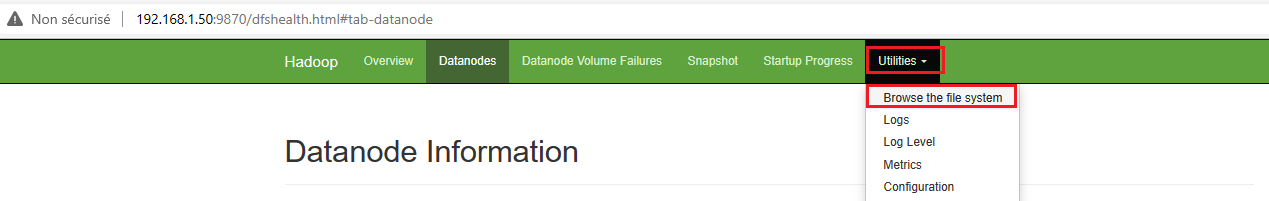

Hadoop Web UI view

Overview

« Summary »

« File system size »

View of the HDFS nodes (DataNodes)

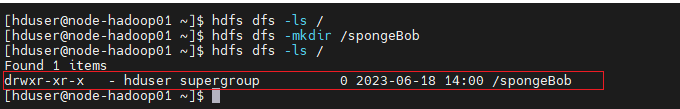

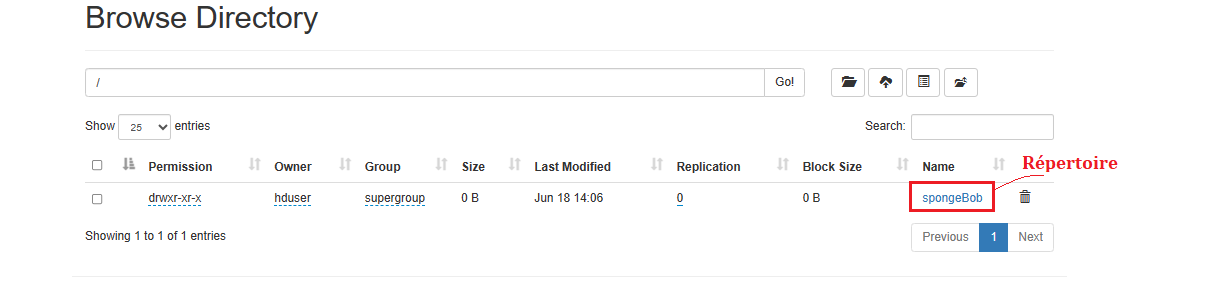

HDFS replication test

On node-hadoop01: creating a directory

[hduser@node-hadoop01 sbin]$ hdfs dfs -ls /

[hduser@node-hadoop01 sbin]$ hdfs dfs -mkdir /spongeBob

[hduser@node-hadoop01 sbin]$ hdfs dfs -ls /

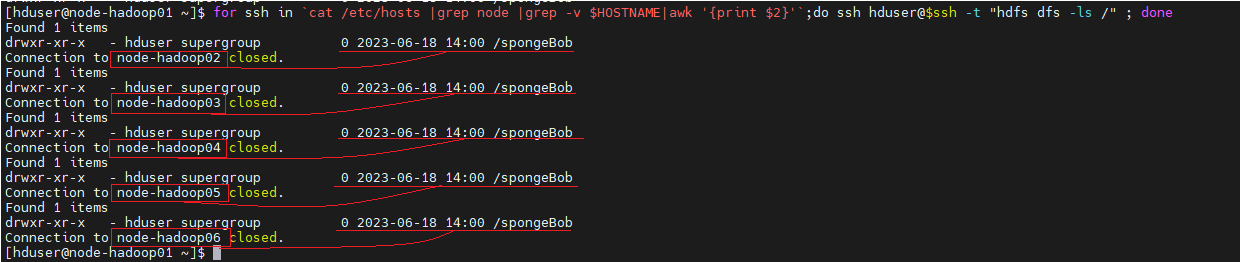

On node-hadoop02 to node-hadoop06

[hduser@node-hadoop01 ~]$ for ssh in `cat /etc/hosts |grep node |grep -v $HOSTNAME|awk '{print $2}'`;do ssh hduser@$ssh -t "hdfs dfs -ls /" ; done

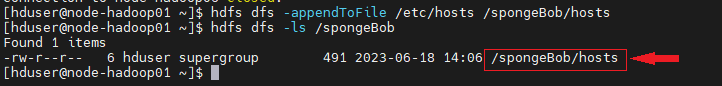

On node-hadoop01: copying a file

[hduser@node-hadoop01 ~]$ hdfs dfs -appendToFile /etc/hosts /spongeBob/hosts

[hduser@node-hadoop01 ~]$ hdfs dfs -ls /spongeBob
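Besides the Web UI, the replication factor of the copied file can be read from the command line with `hdfs dfs -stat %r`. A minimal sketch wrapping that call follows. `check_replication` is a hypothetical helper, and the expected value 6 is taken from the `dfs.replication` setting in hdfs-site.xml. The function is only defined here; the call is left as a comment because it needs a running cluster.

```shell
#!/usr/bin/env bash
# Compare the replication factor reported by HDFS with an expected value.
# $1 = HDFS path, $2 = expected replication factor.
check_replication() {
  local path="$1" expected="$2" actual
  actual="$(hdfs dfs -stat %r "$path")" || return 2
  if [ "$actual" = "$expected" ]; then
    echo "OK: $path has replication $actual"
  else
    echo "MISMATCH: $path has replication $actual, expected $expected"
    return 1
  fi
}

# Usage on a cluster node (not executed here):
#   check_replication /spongeBob/hosts 6
```

The return code is 0 on a match, 1 on a mismatch and 2 if the `hdfs` command itself fails.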

View of the replication (directories and files)
