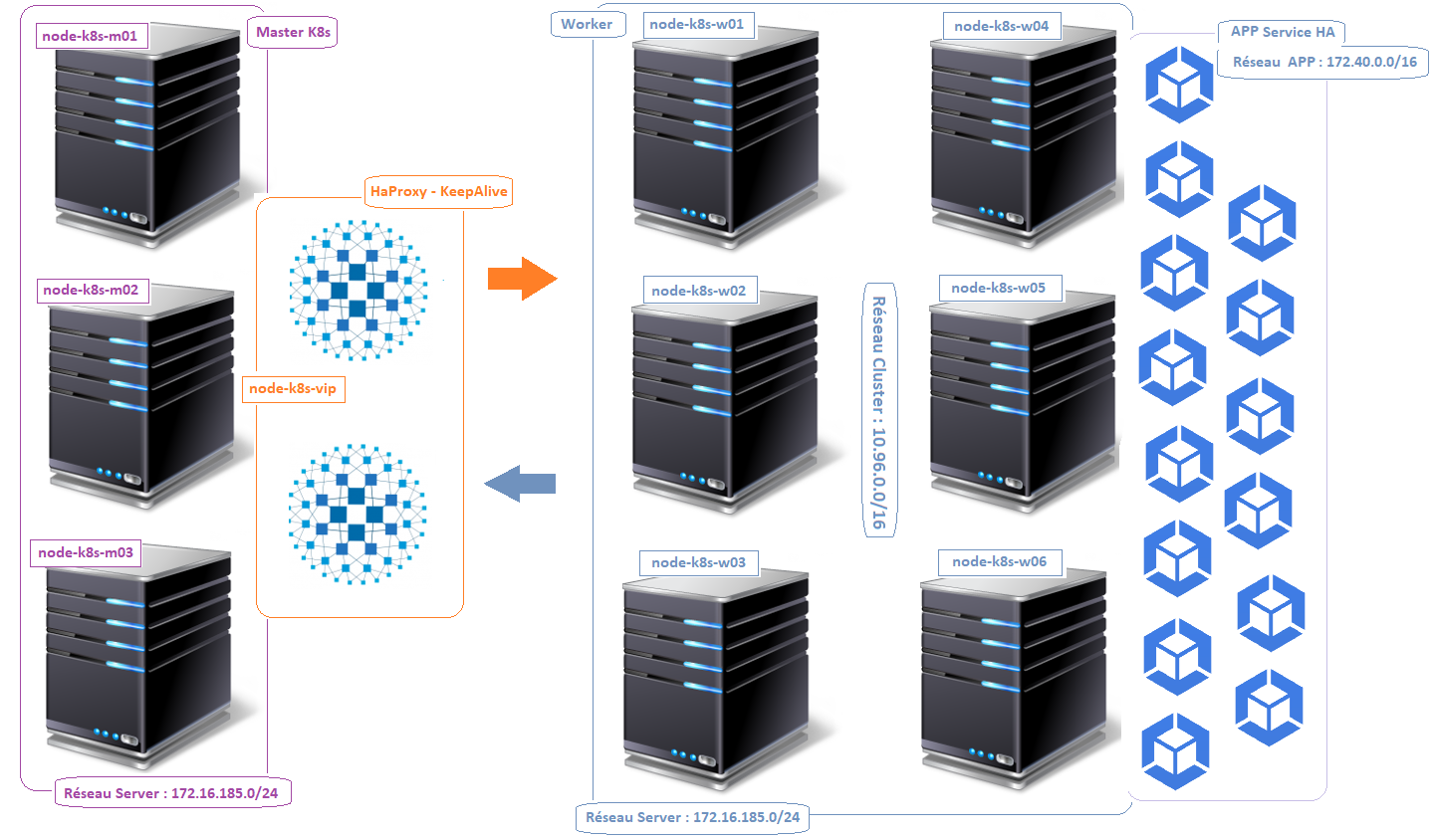

The principle

The K8S infrastructure machines

Master nodes

- node-K8s-vip : 172.16.185.30

- node-K8s-vip : 172.16.185.40

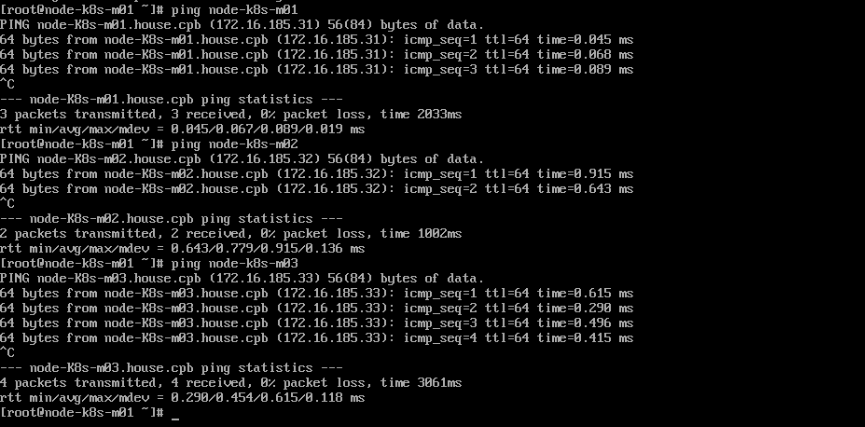

- node-k8s-m01 : 172.16.185.31

- node-k8s-m02 : 172.16.185.32

- node-k8s-m03 : 172.16.185.33

Worker nodes

- node-k8s-w01 : 172.16.185.34

- node-k8s-w02 : 172.16.185.35

- node-k8s-w03 : 172.16.185.36

- node-k8s-w04 : 172.16.185.37

- node-k8s-w05 : 172.16.185.38

- node-k8s-w06 : 172.16.185.39

Client

- node-k8s-c01 : 172.16.185.41

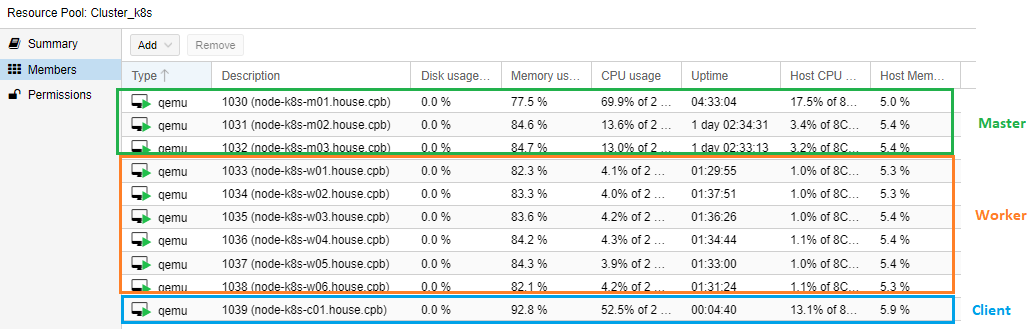

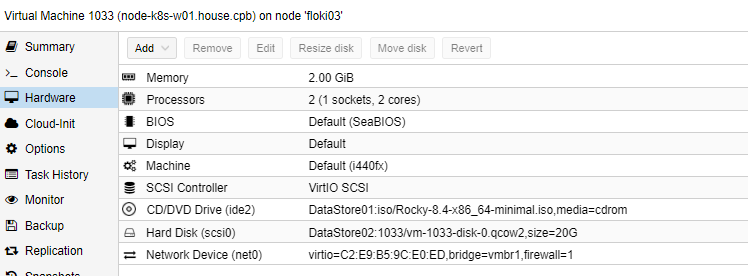

Machine specifications

- OS: RockyLinux 8

- Memory: 2 GB RAM

- vCPU: 2

- Disk: 20 GB

- vSwitch: vmbr1 (172.16.185.0/24)

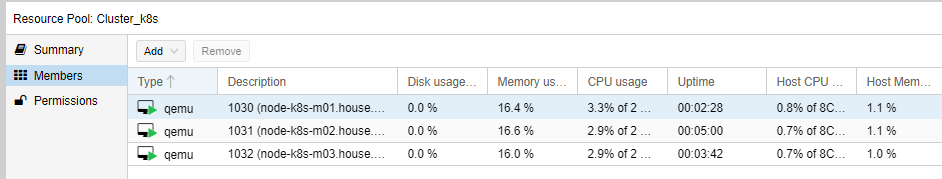

The machine pool

Add the infrastructure machines to your DNS

[root@dns-pri ~]# vi /var/named/forward.house.cpb

Add the following records to your forward DNS zone:

; Infrastructure k8s
; LoadBalancing KeepAlive
node-K8s-vip    IN A    172.16.185.30
node-K8s-vip    IN A    172.16.185.40
; K8s Master
node-K8s-m01    IN A    172.16.185.31
node-K8s-m02    IN A    172.16.185.32
node-K8s-m03    IN A    172.16.185.33
; K8s Worker
node-K8s-w01    IN A    172.16.185.34
node-K8s-w02    IN A    172.16.185.35
node-K8s-w03    IN A    172.16.185.36
node-K8s-w04    IN A    172.16.185.37
node-K8s-w05    IN A    172.16.185.38
node-K8s-w06    IN A    172.16.185.39

[root@dns-pri ~]# vi /var/named/reverse.house.cpb

Add the following records to your reverse DNS zone:

; Infra K8s
; LoadBalancing KeepAlive
30    IN PTR    node-K8s-vip.house.cpb.
40    IN PTR    node-K8s-vip.house.cpb.
; K8s Master
31    IN PTR    node-K8s-m01.house.cpb.
32    IN PTR    node-K8s-m02.house.cpb.
33    IN PTR    node-K8s-m03.house.cpb.
; K8s Worker
34    IN PTR    node-K8s-w01.house.cpb.
35    IN PTR    node-K8s-w02.house.cpb.
36    IN PTR    node-K8s-w03.house.cpb.
37    IN PTR    node-K8s-w04.house.cpb.
38    IN PTR    node-K8s-w05.house.cpb.
39    IN PTR    node-K8s-w06.house.cpb.

[root@dns-pri ~]# systemctl reload named
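The forward and reverse records above follow a fixed pattern: one A record per host, and one PTR record keyed on the last octet (the reverse zone file is assumed to be the one for 172.16.185.0/24). A minimal sketch of a hypothetical helper, `gen_records`, that prints either kind of record from a "hostname IP" list, so that both zone files are generated from the same source:

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of BIND): print zone-file records from
# "hostname ip" pairs read on stdin.
#   forward -> "<host> IN A <ip>"
#   reverse -> "<last octet> IN PTR <host>.<domain>."
# Assumes the reverse zone covers a /24 (here 172.16.185.0/24).
gen_records() {
  local mode="$1" domain="$2" host ip
  case "$mode" in
    forward|reverse) ;;
    *) echo "usage: gen_records forward|reverse DOMAIN" >&2; return 1 ;;
  esac
  while read -r host ip; do
    [ -z "$host" ] && continue          # skip blank lines
    if [ "$mode" = forward ]; then
      printf '%s IN A %s\n' "$host" "$ip"
    else
      printf '%s IN PTR %s.%s.\n' "${ip##*.}" "$host" "$domain"
    fi
  done
}
```

For example, `printf 'node-K8s-m01 172.16.185.31\n' | gen_records reverse house.cpb` prints `31 IN PTR node-K8s-m01.house.cpb.`, the same line as in the reverse zone above.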

Quick name-resolution test on node-k8s-m01

Installing the "Master" machines

RockyLinux machines

- node-K8s-vip : 172.16.185.30 (virtual IP)

- node-K8s-vip : 172.16.185.40 (virtual IP)

- node-k8s-m01 : 172.16.185.31

- node-k8s-m02 : 172.16.185.32

- node-k8s-m03 : 172.16.185.33

Install 3 machines with the following configuration:

k8s pool – k8s-Master machines

1°) Update RockyLinux 8 (3 masters)

[root@node-k8s-m0X ~]# yum -y update && yum -y upgrade

2°) Install packages/middleware (3 masters)

[root@node-k8s-m0X ~]# yum install -y qemu-guest-agent wget chrony

3°) Disable swap (3 masters)

[root@node-k8s-m0X ~]# swapoff -a
[root@node-k8s-m0X ~]# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
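The `sed` expression above prefixes `#` to every /etc/fstab line containing ` swap `. If the step is replayed, it comments the line a second time (`##`), which is harmless but untidy. A minimal idempotent variant, written as a hypothetical helper `comment_swap_entries` that only touches lines not already commented (it can be tried on a copy of the file first):

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style
# file. Only lines that do not already start with '#' are touched, so
# running it several times leaves the file unchanged after the first run.
comment_swap_entries() {
  local fstab="${1:-/etc/fstab}"
  sed -i '/^[^#].* swap /s/^/#/' "$fstab"
}
```

Usage: `swapoff -a && comment_swap_entries /etc/fstab`; afterwards `swapon --show` should print nothing.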

4°) Put SELinux in permissive mode now and disable it from the next boot (3 masters)

[root@node-k8s-m0X ~]# setenforce 0
[root@node-k8s-m0X ~]# sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

5°) Set up KeepAlive (3 masters)

Install KeepAlive (3 masters)

[root@node-k8s-m0X ~]# yum install -y keepalived
[root@node-k8s-m0X ~]# groupadd -r keepalived_script
[root@node-k8s-m0X ~]# useradd -r -s /sbin/nologin -g keepalived_script -M keepalived_script
[root@node-k8s-m0X ~]# cp /etc/keepalived/keepalived.conf{,-old}
[root@node-k8s-m0X ~]# sh -c '> /etc/keepalived/keepalived.conf'

Configure KeepAlive – node-k8s-m01 – MASTER 01

[root@node-k8s-m01 ~]# vi /etc/keepalived/keepalived.conf

! /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

chris@en-images.info

}

notification_email_from chris@en-images.info

smtp_server localhost

smtp_connect_timeout 30

}

vrrp_script check_server {

script "/etc/keepalived/check_server.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface eth0

virtual_router_id 51

priority 100

authentication {

auth_type PASS

auth_pass droopy2021

}

virtual_ipaddress {

172.16.185.30/24

172.16.185.40/24

}

track_script {

check_server

}

}

Configure KeepAlive – node-k8s-m02 – MASTER 02

[root@node-k8s-m02 ~]# vi /etc/keepalived/keepalived.conf

! /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

chris@en-images.info

}

notification_email_from chris@en-images.info

smtp_server localhost

smtp_connect_timeout 30

}

vrrp_script check_server {

script "/etc/keepalived/check_server.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 99

authentication {

auth_type PASS

auth_pass droopy2021

}

virtual_ipaddress {

172.16.185.30/24

172.16.185.40/24

}

track_script {

check_server

}

}

Configure KeepAlive – node-k8s-m03 – MASTER 03

[root@node-k8s-m03 ~]# vi /etc/keepalived/keepalived.conf

! /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

chris@en-images.info

}

notification_email_from chris@en-images.info

smtp_server localhost

smtp_connect_timeout 30

}

vrrp_script check_server {

script "/etc/keepalived/check_server.sh"

interval 3

weight -2

fall 10

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface eth0

virtual_router_id 51

priority 98

authentication {

auth_type PASS

auth_pass droopy2021

}

virtual_ipaddress {

172.16.185.30/24

172.16.185.40/24

}

track_script {

check_server

}

}

check_server.sh script (3 masters)

[root@node-k8s-m0X ~]# vi /etc/keepalived/check_server.sh

#!/bin/sh
# Virtual IPs: must match virtual_ipaddress in keepalived.conf
APISERVER_VIP1=172.16.185.30
APISERVER_VIP2=172.16.185.40
APISERVER_DEST_PORT=6443

errorExit() {
    echo "*** $*" 1>&2
    exit 1
}

# The local API server must respond
curl --silent --max-time 2 --insecure https://localhost:${APISERVER_DEST_PORT}/ -o /dev/null || errorExit "Error GET https://localhost:${APISERVER_DEST_PORT}/"

# If this node currently holds a VIP, the API server must also respond on that VIP
if ip addr | grep -q ${APISERVER_VIP1}; then
    curl --silent --max-time 2 --insecure https://${APISERVER_VIP1}:${APISERVER_DEST_PORT}/ -o /dev/null || errorExit "Error GET https://${APISERVER_VIP1}:${APISERVER_DEST_PORT}/"
fi
if ip addr | grep -q ${APISERVER_VIP2}; then
    curl --silent --max-time 2 --insecure https://${APISERVER_VIP2}:${APISERVER_DEST_PORT}/ -o /dev/null || errorExit "Error GET https://${APISERVER_VIP2}:${APISERVER_DEST_PORT}/"
fi

[root@node-k8s-m0X ~]# chmod +x /etc/keepalived/check_server.sh

Allow non-local bind and disable IPv6 (3 masters)

[root@node-k8s-m0X ~]# echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf

[root@node-k8s-m0X ~]# echo "net.ipv6.conf.all.disable_ipv6 = 1" >> /etc/sysctl.conf
[root@node-k8s-m0X ~]# echo "net.ipv6.conf.all.autoconf = 0" >> /etc/sysctl.conf
[root@node-k8s-m0X ~]# echo "net.ipv6.conf.default.disable_ipv6 = 1" >> /etc/sysctl.conf
[root@node-k8s-m0X ~]# echo "net.ipv6.conf.default.autoconf = 0" >> /etc/sysctl.conf
[root@node-k8s-m0X ~]# sysctl -p
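Appending with `echo ... >>` writes a duplicate line every time a step is replayed. A minimal sketch of an idempotent alternative, as a hypothetical helper `set_sysctl` that rewrites an existing key in place (dropping any duplicates) and only appends when the key is absent:

```shell
#!/usr/bin/env bash
# Hypothetical helper: make sure FILE contains exactly one "KEY = VALUE"
# line for KEY. An existing entry is replaced (duplicates are dropped);
# otherwise the entry is appended. Comment lines are left untouched.
set_sysctl() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}" tmp
  touch "$file" || return 1
  tmp=$(mktemp) || return 1
  awk -v k="$key" -v v="$value" '
    {
      split($0, kv, "=")
      name = kv[1]
      gsub(/[ \t]/, "", name)            # "net.x = 1" -> "net.x"
      if (name == k) {
        if (!done) print k " = " v       # keep only the first match
        done = 1
        next
      }
      print
    }
    END { if (!done) print k " = " v }' "$file" > "$tmp" &&
    cat "$tmp" > "$file"                 # rewrite in place, keep permissions
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}
```

For example, `set_sysctl net.ipv6.conf.all.disable_ipv6 1 /etc/sysctl.d/99-k8s.conf` followed by `sysctl --system` (which, unlike `sysctl -p`, also loads the files under /etc/sysctl.d) keeps these settings in a single file that can be re-applied safely.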

Firewall rule for KeepAlive (3 masters)

[root@node-k8s-m0X ~]# firewall-cmd --add-rich-rule='rule protocol value="vrrp" accept' --permanent --zone=public
[root@node-k8s-m0X ~]# firewall-cmd --reload

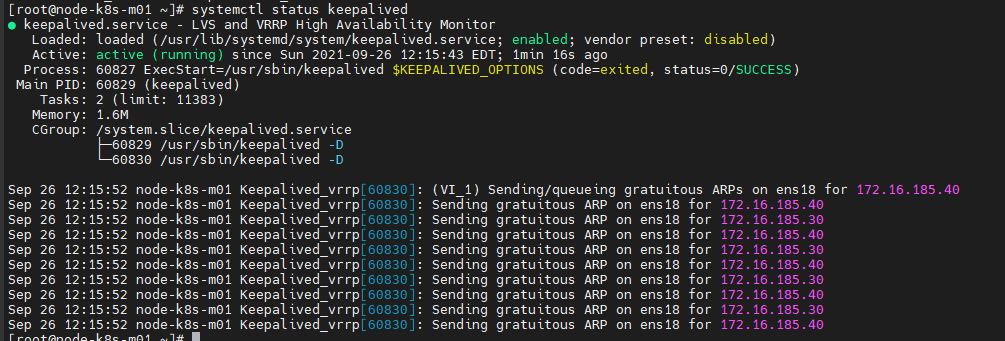

Start the KeepAlive service (3 masters)

[root@node-k8s-m0X ~]# systemctl enable keepalived
[root@node-k8s-m0X ~]# systemctl start keepalived
[root@node-k8s-m0X ~]# systemctl status keepalived

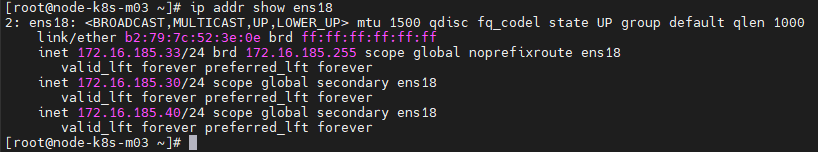

[root@node-k8s-m01 ~]# ip a

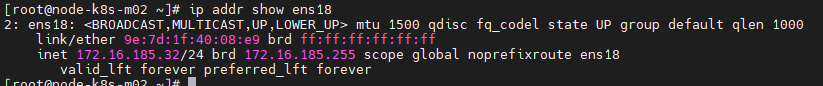

[root@node-k8s-m02 ~]# ip addr show eth0

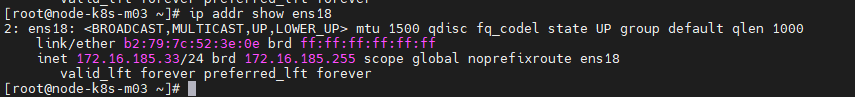

[root@node-k8s-m03 ~]# ip addr show eth0

KeepAlive functional test

node-k8s-m01 is down: node-k8s-m02 takes over the virtual IPs.

[root@node-k8s-m01 ~]# systemctl stop keepalived

[root@node-k8s-m02 ~]# ip addr show eth0

node-k8s-m01 and node-k8s-m02 are both down: node-k8s-m03 takes over the virtual IPs.

[root@node-k8s-m01 ~]# systemctl stop keepalived

[root@node-k8s-m02 ~]# systemctl stop keepalived

[root@node-k8s-m03 ~]# ip addr show eth0

node-k8s-m01 is back UP: it takes the virtual IPs back, since it has the highest priority (100).

[root@node-k8s-m01 ~]# systemctl start keepalived
[root@node-k8s-m01 ~]# ip addr show eth0

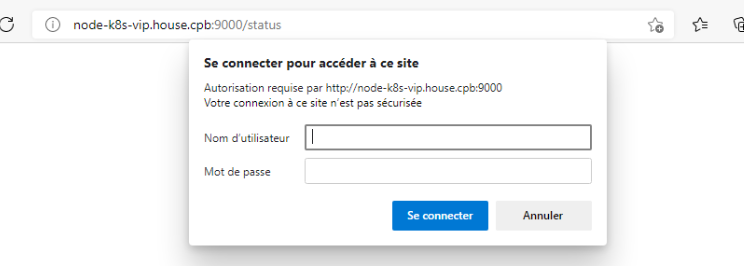

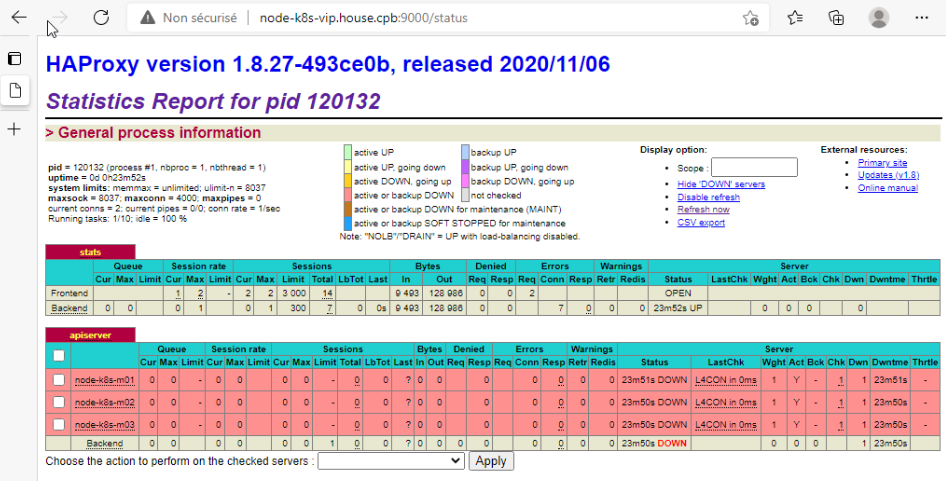
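With the values in keepalived.conf, each master runs check_server.sh every 3 seconds (`interval 3`); after 10 consecutive failures (`fall 10`) KeepAlive lowers that node's priority by 2 (`weight -2`), and restores it after 2 successes (`rise 2`). node-k8s-m01 therefore drops from 100 to 98 when its check fails, below node-k8s-m02 (99), which then takes the VIPs; the tests above reach the same result by stopping keepalived outright. A simplified model of that election, as a hypothetical shell helper `vrrp_elect` (an illustration of the arithmetic, not how keepalived is implemented):

```shell
#!/usr/bin/env bash
# Simplified model of the VRRP election configured above (not keepalived
# itself). Input lines: "<node> <priority> <ok|fail>", one per node whose
# keepalived is running. A negative weight is subtracted when the check
# fails; a positive weight is added when it succeeds. Prints the node
# with the highest effective priority. Ties are NOT resolved the way VRRP
# does (higher primary IP wins): the first node listed wins here.
vrrp_elect() {
  local weight="$1"
  awk -v w="$weight" '
    BEGIN { w += 0 }
    {
      eff = $2 + 0
      if (w < 0 && $3 == "fail") eff += w
      if (w > 0 && $3 == "ok")   eff += w
      if (NR == 1 || eff > best) { best = eff; name = $1 }
    }
    END { if (NR > 0) print name, best }'
}
```

For example, `printf 'm01 100 fail\nm02 99 ok\nm03 98 ok\n' | vrrp_elect -2` elects m02 with 99. Note that before Kubernetes is installed nothing listens on port 6443, so the check fails on all three masters; the priorities become 98, 97 and 96, the order is unchanged and node-k8s-m01 keeps the VIPs.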

6°) Set up HaProxy (3 masters)

[root@node-k8s-m0X ~]# yum -y install haproxy
[root@node-k8s-m0X ~]# cp /etc/haproxy/haproxy.cfg{,-old}
[root@node-k8s-m0X ~]# sh -c '> /etc/haproxy/haproxy.cfg'

[root@node-k8s-m0X ~]# vi /etc/haproxy/haproxy.cfg

############################################
# Global, defaults and management (stats) site
############################################
global
    log 127.0.0.1 local2
    chroot /var/lib/haproxy
    pidfile /var/run/haproxy.pid
    maxconn 4000
    user haproxy
    group haproxy
    daemon
    #description HA Proxy on the HA K8S Cluster infrastructure
    stats socket /var/lib/haproxy/stats

defaults
    mode http
    log global
    option dontlognull
    option http-server-close
    option redispatch
    retries 3
    timeout http-request 10s
    timeout queue 1m
    timeout connect 10s
    timeout client 1m
    timeout server 1m
    timeout http-keep-alive 10s
    timeout check 10s
    maxconn 3000

listen stats
    bind *:9000
    stats enable
    stats uri /status
    stats refresh 2s
    stats auth chris:Chris
    stats show-legends
    stats admin if TRUE

###############################################
# Load balancing
###############################################
frontend apiserver
    bind *:8443
    mode tcp
    option tcplog
    default_backend apiserver

backend apiserver
    option httpchk GET /healthz
    http-check expect status 200
    mode tcp
    option ssl-hello-chk
    balance roundrobin
    server node-k8s-m01 172.16.185.31:6443 check
    server node-k8s-m02 172.16.185.32:6443 check
    server node-k8s-m03 172.16.185.33:6443 check

[root@node-k8s-m0X ~]# haproxy -f /etc/haproxy/haproxy.cfg -c
Configuration file is valid

Firewall rules (3 masters)

[root@node-k8s-m0X ~]# firewall-cmd --add-port=9000/tcp --zone=public --permanent
[root@node-k8s-m0X ~]# firewall-cmd --reload

Start the HaProxy service (3 masters, since the virtual IPs can move to any of them)

[root@node-k8s-m0X ~]# systemctl enable --now haproxy
[root@node-k8s-m0X ~]# systemctl status haproxy

- Stats interface: http://node-k8s-vip.house.cpb:9000/status

The login/password is the one set by "stats auth" in haproxy.cfg.

For now, the servers of the "apiserver" backend are shown DOWN because the Kubernetes API service is not installed yet.
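The backend state shown on the stats page can also be read from a script: HAProxy serves the same statistics as CSV when `;csv` is appended to the stats URI, and the 18th column (`status`) holds UP, DOWN, etc. for each server. A minimal sketch, assuming the `stats uri /status`, port 9000 and `stats auth` credentials configured above; `backend_status` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read HAProxy CSV stats on stdin and print
# "<server> <status>" for every server of the given backend.
# CSV columns: $1 = pxname, $2 = svname, $18 = status.
backend_status() {
  local backend="$1"
  awk -F, -v b="$backend" '
    $1 == b && $2 != "FRONTEND" && $2 != "BACKEND" { print $2, $18 }'
}

# Example (needs the stats page from haproxy.cfg to be reachable):
#   curl -s -u chris:Chris 'http://node-k8s-vip.house.cpb:9000/status;csv' \
#     | backend_status apiserver
```

At this stage the example should list the three masters as DOWN; they switch to UP once the API servers are running.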

7°) Install Docker, kubeadm, kubelet and kubectl (3 masters)

Install Docker

[root@node-k8s-m0X ~]# yum install -y yum-utils
[root@node-k8s-m0X ~]# yum install -y iproute
[root@node-k8s-m0X ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
Adding repo from: https://download.docker.com/linux/centos/docker-ce.repo
[root@node-k8s-m0X ~]# yum -y install docker-ce

[root@node-k8s-m0X ~]# systemctl enable docker --now
[root@node-k8s-m0X ~]# docker -v
Docker version 20.10.8, build 3967b7d

Install kubeadm, kubelet and kubectl

[root@node-k8s-m0X ~]# vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl

[root@node-k8s-m0X ~]# yum -y update
[root@node-k8s-m0X ~]# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

Align the kubelet cgroup driver with Docker's (cgroupfs)

[root@node-k8s-m0X ~]# docker info | grep -i cgroup
 Cgroup Driver: cgroupfs
 Cgroup Version: 1

[root@node-k8s-m0X ~]# vi /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS=--cgroup-driver=cgroupfs
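kubelet and the container runtime must use the same cgroup driver, otherwise kubelet fails to start its pods. Rather than typing the value by hand on every node, the line can be derived from `docker info`. A minimal sketch with a hypothetical helper `kubelet_args_from_docker_info`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `docker info` output on stdin and print the
# /etc/sysconfig/kubelet line that makes kubelet use Docker's cgroup driver.
kubelet_args_from_docker_info() {
  local driver
  driver=$(awk -F': *' '/Cgroup Driver:/ { print $2; exit }')
  if [ -z "$driver" ]; then
    echo "no 'Cgroup Driver' line found" >&2
    return 1
  fi
  printf 'KUBELET_EXTRA_ARGS=--cgroup-driver=%s\n' "$driver"
}

# Example (only overwrites /etc/sysconfig/kubelet if a driver was found):
#   line=$(docker info 2>/dev/null | kubelet_args_from_docker_info) &&
#     echo "$line" > /etc/sysconfig/kubelet
```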

Firewall rules

[root@node-k8s-m0X ~]# firewall-cmd --permanent --add-port=6443/tcp
[root@node-k8s-m0X ~]# firewall-cmd --permanent --add-port=2379-2380/tcp
[root@node-k8s-m0X ~]# firewall-cmd --permanent --add-port={8443,10250,10251,10252,179}/tcp
[root@node-k8s-m0X ~]# firewall-cmd --add-port={4789,9099}/tcp --permanent
[root@node-k8s-m0X ~]# firewall-cmd --permanent --add-port=4789/udp
[root@node-k8s-m0X ~]# firewall-cmd --add-masquerade --permanent
[root@node-k8s-m0X ~]# firewall-cmd --reload
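To confirm that the rules are active after the reload, the output of `firewall-cmd --list-ports` can be compared with the expected list. A minimal sketch with a hypothetical helper `missing_ports`, which does a plain string comparison (a range such as 2379-2380/tcp must appear in exactly that form):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each required "port/proto" entry that is not
# present in a space-separated list such as the output of
# `firewall-cmd --list-ports`; return non-zero if any is missing.
# Exact string match: "2379-2380/tcp" must appear in that exact form.
missing_ports() {
  local listed=" $1 " p rc=0
  shift
  for p in "$@"; do
    case "$listed" in
      *" $p "*) ;;                  # present
      *) echo "$p"; rc=1 ;;         # missing
    esac
  done
  return "$rc"
}
```

Usage on a master: `missing_ports "$(firewall-cmd --list-ports)" 6443/tcp 2379-2380/tcp 8443/tcp 10250/tcp 10251/tcp 10252/tcp 179/tcp 4789/tcp 9099/tcp 4789/udp` prints nothing when every port is open.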

Network settings (3 masters)

[root@node-k8s-m0X ~]# modprobe br_netfilter
[root@node-k8s-m0X ~]# echo "br_netfilter" > /etc/modules-load.d/br_netfilter.conf
[root@node-k8s-m0X ~]# sh -c "echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables"
[root@node-k8s-m0X ~]# sh -c "echo '1' > /proc/sys/net/ipv4/ip_forward"
[root@node-k8s-m0X ~]# systemctl enable kubelet.service
[root@node-k8s-m0X ~]# systemctl restart firewalld

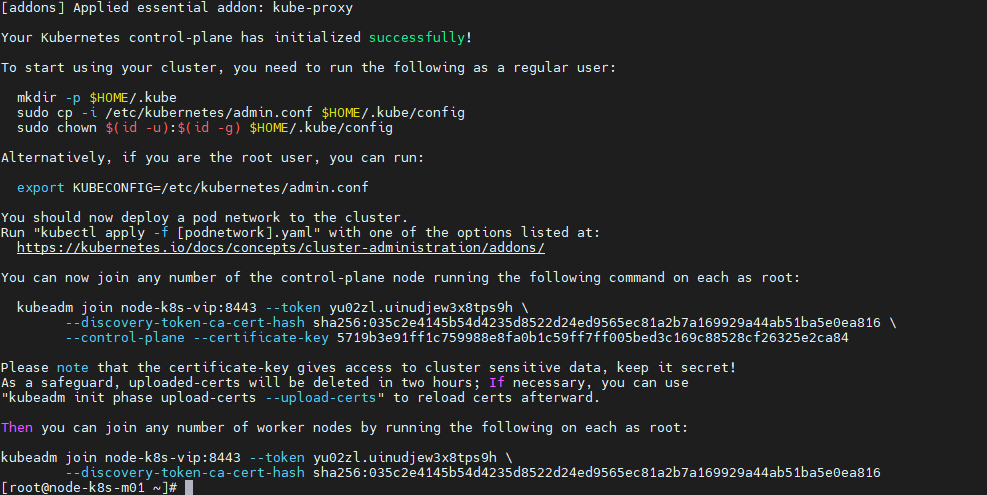

8°) Initialize the K8S cluster (3 masters)

Cluster initialization (node-k8s-m01)

[root@node-k8s-m01 ~]# kubeadm init --control-plane-endpoint node-k8s-vip:8443 --upload-certs --pod-network-cidr=10.40.0.0/16

Copy the two join commands printed at the end of the output (token, CA certificate hash and certificate key) somewhere safe: the first one is for the masters, the second one for the workers.

kubeadm join node-k8s-vip:8443 --token yu02zl.uinudjew3x8tps9h \
    --discovery-token-ca-cert-hash sha256:035c2e4145b54d4235d8522d24ed9565ec81a2b7a169929a44ab51ba5e0ea816 \
    --control-plane --certificate-key 5719b3e91ff1c759988e8fa0b1c59ff7ff005bed3c169c88528cf26325e2ca84

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!
As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use
"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join node-k8s-vip:8443 --token yu02zl.uinudjew3x8tps9h \
    --discovery-token-ca-cert-hash sha256:035c2e4145b54d4235d8522d24ed9565ec81a2b7a169929a44ab51ba5e0ea816
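The bootstrap token expires (24 hours by default) and the uploaded certificates are deleted after two hours, as the output says. To join a node later, `kubeadm token create --print-join-command` prints a fresh worker join command, and `kubeadm init phase upload-certs --upload-certs` prints a new certificate key for masters. The `--discovery-token-ca-cert-hash` value does not change: it is the SHA-256 hash of the DER-encoded public key of the cluster CA. A minimal sketch wrapping the openssl pipeline given in the kubeadm documentation; the helper name `ca_cert_hash` is hypothetical, and an RSA CA key (the kubeadm default) is assumed:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "sha256:<hex>" for --discovery-token-ca-cert-hash
# from a CA certificate (default: the kubeadm CA on a master).
# Assumes an RSA CA key, as generated by kubeadm by default.
ca_cert_hash() {
  local ca="${1:-/etc/kubernetes/pki/ca.crt}"
  local hex
  # pipefail: a missing/invalid certificate must not yield the hash of ""
  hex=$(set -o pipefail
        openssl x509 -pubkey -noout -in "$ca" \
          | openssl rsa -pubin -outform der 2>/dev/null \
          | openssl dgst -sha256 -hex \
          | sed 's/^.* //') || return 1
  [ -n "$hex" ] || return 1
  printf 'sha256:%s\n' "$hex"
}
```

Run on node-k8s-m01, `ca_cert_hash` should print the same `sha256:035c2e41...` value as the join commands above.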

[root@node-k8s-m01 ~]# mkdir -p $HOME/.kube
[root@node-k8s-m01 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@node-k8s-m01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config
[root@node-k8s-m01 ~]# curl https://docs.projectcalico.org/manifests/calico.yaml -O
[root@node-k8s-m01 ~]# kubectl apply -f calico.yaml

[root@node-k8s-m01 ~]# kubectl get nodes
node-k8s-m01   Ready    control-plane,master   27m   v1.22.2

Add node-k8s-m02 to the cluster

[root@node-k8s-m02 ~]# vi /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS=--cgroup-driver=cgroupfs

[root@node-k8s-m02 ~]# kubeadm join node-k8s-vip:8443 --token yu02zl.uinudjew3x8tps9h \
    --discovery-token-ca-cert-hash sha256:035c2e4145b54d4235d8522d24ed9565ec81a2b7a169929a44ab51ba5e0ea816 \
    --control-plane --certificate-key 5719b3e91ff1c759988e8fa0b1c59ff7ff005bed3c169c88528cf26325e2ca84

[root@node-k8s-m02 ~]# mkdir -p $HOME/.kube
[root@node-k8s-m02 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@node-k8s-m02 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@node-k8s-m01 ~]# kubectl get nodes
NAME           STATUS   ROLES                  AGE    VERSION
node-k8s-m01   Ready    control-plane,master   176m   v1.22.2
node-k8s-m02   Ready    control-plane,master   137m   v1.22.2

Add node-k8s-m03 to the cluster

[root@node-k8s-m03 ~]# vi /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS=--cgroup-driver=cgroupfs

[root@node-k8s-m03 ~]# kubeadm join node-k8s-vip:8443 --token yu02zl.uinudjew3x8tps9h \
    --discovery-token-ca-cert-hash sha256:035c2e4145b54d4235d8522d24ed9565ec81a2b7a169929a44ab51ba5e0ea816 \
    --control-plane --certificate-key 5719b3e91ff1c759988e8fa0b1c59ff7ff005bed3c169c88528cf26325e2ca84

[root@node-k8s-m03 ~]# mkdir -p $HOME/.kube
[root@node-k8s-m03 ~]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
[root@node-k8s-m03 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@node-k8s-m01 ~]# kubectl get nodes
NAME           STATUS   ROLES                  AGE     VERSION
node-k8s-m01   Ready    control-plane,master   45m     v1.22.2
node-k8s-m02   Ready    control-plane,master   6m55s   v1.22.2
node-k8s-m03   Ready    control-plane,master   77s     v1.22.2

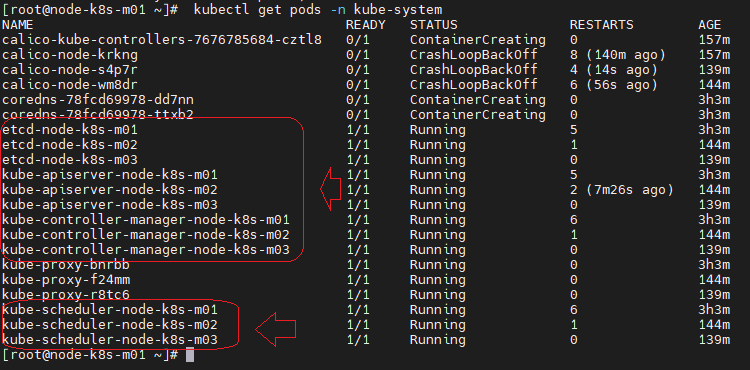

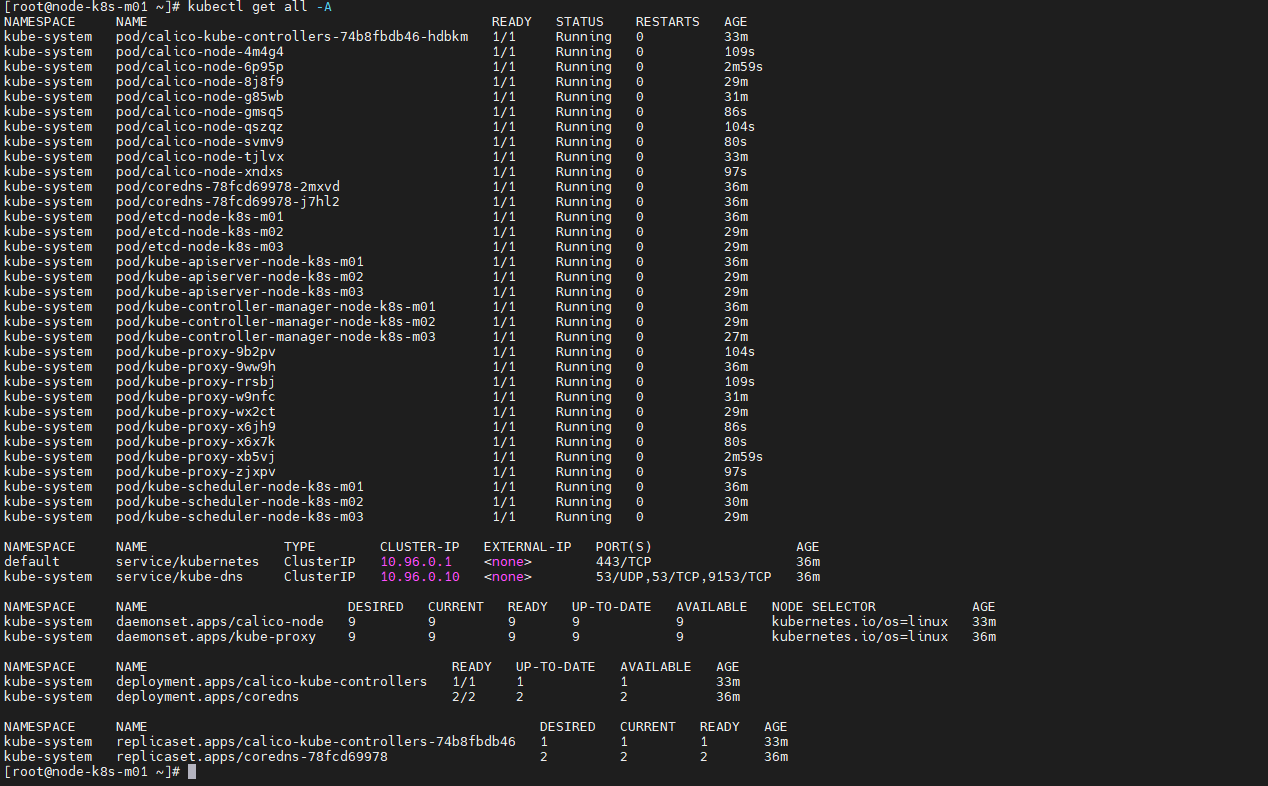

[root@node-k8s-m01 ~]# kubectl get pods -n kube-system

Installing the "Worker" machines

RockyLinux machines

- node-k8s-w01 : 172.16.185.34

- node-k8s-w02 : 172.16.185.35

- node-k8s-w03 : 172.16.185.36

- node-k8s-w04 : 172.16.185.37

- node-k8s-w05 : 172.16.185.38

- node-k8s-w06 : 172.16.185.39

Install 6 machines with the following configuration:

k8s pool – k8s-worker machines

1°) Update RockyLinux 8 (6 workers)

[root@node-k8s-w0X ~]# yum -y update && yum -y upgrade

2°) Install packages/middleware (6 workers)

[root@node-k8s-w0X ~]# yum install -y qemu-guest-agent wget chrony

3°) Disable swap (6 workers)

[root@node-k8s-w0X ~]# swapoff -a
[root@node-k8s-w0X ~]# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

4°) Put SELinux in permissive mode now and disable it from the next boot (6 workers)

[root@node-k8s-w0X ~]# setenforce 0
[root@node-k8s-w0X ~]# sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

5°) Install Docker (6 workers)

[root@node-k8s-w0X ~]# yum install -y yum-utils
[root@node-k8s-w0X ~]# yum install -y iproute

[root@node-k8s-w0X ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo
Adding repo from: https://download.docker.com/linux/centos/docker-ce.repo
[root@node-k8s-w0X ~]# yum -y install docker-ce

[root@node-k8s-w0X ~]# systemctl enable --now docker
[root@node-k8s-w0X ~]# docker -v
Docker version 20.10.8, build 3967b7d

6°) Install kubeadm, kubelet and kubectl (6 workers)

[root@node-k8s-w0X ~]# vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl

[root@node-k8s-w0X ~]# yum -y update
[root@node-k8s-w0X ~]# yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes
[root@node-k8s-w0X ~]# systemctl enable kubelet.service

7°) Firewall rules (6 workers)

[root@node-k8s-w0X ~]# firewall-cmd --permanent --add-port=30000-32767/tcp
[root@node-k8s-w0X ~]# firewall-cmd --permanent --add-port={10250,179}/tcp
[root@node-k8s-w0X ~]# firewall-cmd --permanent --add-port=4789/udp
[root@node-k8s-w0X ~]# firewall-cmd --add-port={4789,9099}/tcp --permanent
[root@node-k8s-w0X ~]# firewall-cmd --add-masquerade --permanent

8°) Application rules (6 workers)

[root@node-k8s-w0X ~]# firewall-cmd --permanent --add-port=80/tcp
[root@node-k8s-w0X ~]# firewall-cmd --reload

9°) Bridge settings for the internal k8s network (6 workers)

[root@node-k8s-w0X ~]# modprobe br_netfilter
[root@node-k8s-w0X ~]# echo "br_netfilter" > /etc/modules-load.d/br_netfilter.conf
[root@node-k8s-w0X ~]# echo "net.ipv4.ip_nonlocal_bind = 1" >> /etc/sysctl.conf
[root@node-k8s-w0X ~]# echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf
[root@node-k8s-w0X ~]# echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf
[root@node-k8s-w0X ~]# sh -c "echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables"
[root@node-k8s-w0X ~]# sh -c "echo '1' > /proc/sys/net/ipv4/ip_forward"

10°) Disable IPv6 (6 workers)

[root@node-k8s-w0X ~]# echo "net.ipv6.conf.all.disable_ipv6 = 1" >> /etc/sysctl.conf
[root@node-k8s-w0X ~]# echo "net.ipv6.conf.all.autoconf = 0" >> /etc/sysctl.conf
[root@node-k8s-w0X ~]# echo "net.ipv6.conf.default.disable_ipv6 = 1" >> /etc/sysctl.conf
[root@node-k8s-w0X ~]# echo "net.ipv6.conf.default.autoconf = 0" >> /etc/sysctl.conf
[root@node-k8s-w0X ~]# sysctl -p

11°) Join node-k8s-w0X to the cluster (6 workers)

[root@node-k8s-w0X ~]# vi /etc/sysconfig/kubelet
KUBELET_EXTRA_ARGS=--cgroup-driver=cgroupfs

[root@node-k8s-w0X ~]# kubeadm join node-k8s-vip:8443 --token yu02zl.uinudjew3x8tps9h \
    --discovery-token-ca-cert-hash sha256:035c2e4145b54d4235d8522d24ed9565ec81a2b7a169929a44ab51ba5e0ea816

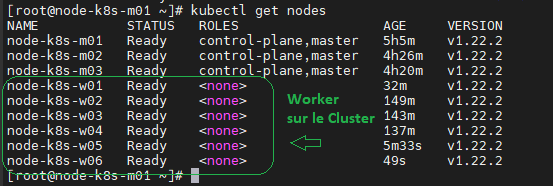

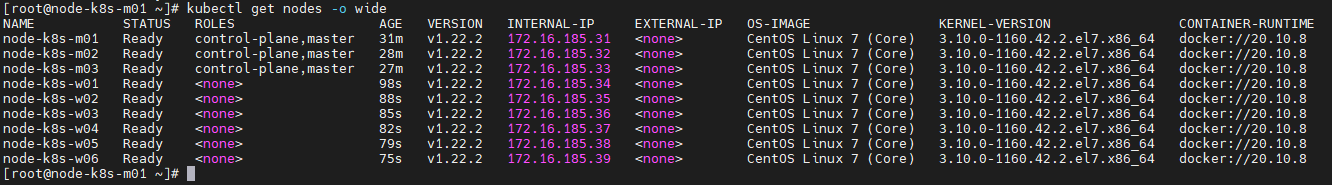

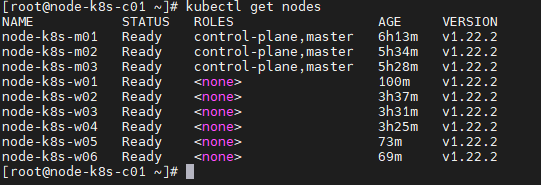

Check the workers from node-k8s-m01

[root@node-k8s-m01 ~]# kubectl get nodes

[root@node-k8s-m01 ~]# kubectl get nodes -o wide

[root@node-k8s-m01 ~]# kubectl get all -A
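`kubectl wait --for=condition=Ready nodes --all --timeout=300s` blocks until every node reports Ready (or the timeout expires), which is handy right after the workers join, since nodes stay NotReady until their Calico pods run. When the `kubectl get nodes` listing itself has to be checked, for example from a script on the client, a minimal sketch with a hypothetical helper `all_nodes_ready`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes` output (header + one line
# per node) on stdin; print every node whose STATUS is not exactly
# "Ready" and return non-zero if there is one, or if no node is listed.
# Note: a cordoned node ("Ready,SchedulingDisabled") is reported too.
all_nodes_ready() {
  awk 'NR > 1 && $2 != "Ready" { print "not ready: " $1; bad = 1 }
       END {
         if (NR < 2) { print "no nodes listed"; exit 1 }
         exit (bad ? 1 : 0)
       }'
}
```

Usage: `kubectl get nodes | all_nodes_ready && echo "all 9 nodes are Ready"`.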

On the HaProxy side

Installing a "DevOps" client for testing

RockyLinux machine

- node-k8s-c01 : 172.16.185.41

1°) Update RockyLinux 8

[root@node-k8s-c01 ~]# yum -y update && yum -y upgrade

2°) Install packages/middleware

[root@node-k8s-c01 ~]# yum install -y qemu-guest-agent wget chrony

3°) Disable swap

[root@node-k8s-c01 ~]# swapoff -a
[root@node-k8s-c01 ~]# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

4°) Set SELinux to permissive mode

[root@node-k8s-c01 ~]# setenforce 0
[root@node-k8s-c01 ~]# sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

5°) Install kubectl

[root@node-k8s-c01 ~]# vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
exclude=kubelet kubeadm kubectl

[root@node-k8s-c01 ~]# yum install -y kubectl --disableexcludes=kubernetes

6°) Copy the cluster admin configuration to the DEV client

[root@node-k8s-c01 ~]# mkdir -p $HOME/.kube
[root@node-k8s-c01 ~]# scp root@node-k8s-m01:/etc/kubernetes/admin.conf $HOME/.kube/config
[root@node-k8s-c01 ~]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@node-k8s-c01 ~]# kubectl get nodes

7°) Deploy an Apache (httpd) image exposed through a NodePort

[root@node-k8s-c01 ~]# kubectl create deployment serv1 --image=httpd
deployment.apps/serv1 created

[root@node-k8s-c01 ~]# kubectl get deployments.apps serv1
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
serv1   1/1     1            1           76s

[root@node-k8s-c01 ~]# kubectl get pods
NAME                    READY   STATUS    RESTARTS   AGE
serv1-f684bf4bf-lhthx   1/1     Running   0          57s

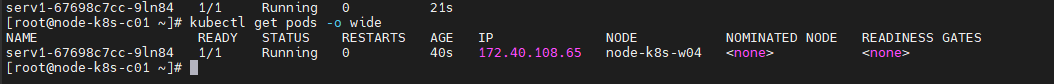

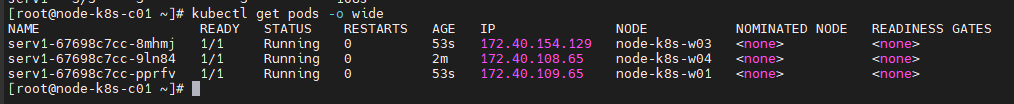

[root@node-k8s-c01 ~]# kubectl get pods -o wide

8°) Add replicas to increase the resilience of the Apache service

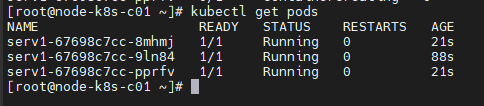

[root@node-k8s-c01 ~]# kubectl scale deployment serv1 --replicas=3
deployment.apps/serv1 scaled

[root@node-k8s-c01 ~]# kubectl get pods

[root@node-k8s-c01 ~]# kubectl get deployments.apps serv1
NAME    READY   UP-TO-DATE   AVAILABLE   AGE
serv1   3/3     3            3           3m56s

[root@node-k8s-c01 ~]# kubectl get pods -o wide

[root@node-k8s-c01 ~]# kubectl expose deployment serv1 --name=serv1 --type=NodePort --port=80 --target-port=80
service/serv1 exposed

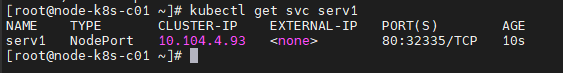

[root@node-k8s-c01 ~]# kubectl get svc serv1

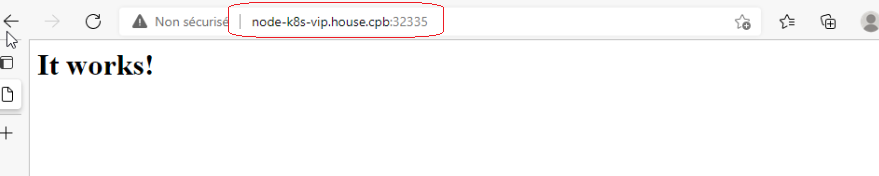

9°) Test access to serv1 through the load-balanced address "node-k8s-vip"

[root@node-k8s-c01 ~]# curl http://node-k8s-vip:32335
<html><body><h1>It works!</h1></body></html>

10°) Test access to serv1 through the "Master" and "Worker" nodes

On the masters

[root@node-k8s-c01 ~]# curl http://node-k8s-m01:32335
<html><body><h1>It works!</h1></body></html>

[root@node-k8s-c01 ~]# curl http://node-k8s-m02:32335
<html><body><h1>It works!</h1></body></html>

[root@node-k8s-c01 ~]# curl http://node-k8s-m03:32335
<html><body><h1>It works!</h1></body></html>

On the workers

[root@node-k8s-c01 ~]# curl http://node-k8s-w01:32335
<html><body><h1>It works!</h1></body></html>

[root@node-k8s-c01 ~]# curl http://node-k8s-w02:32335
<html><body><h1>It works!</h1></body></html>

[root@node-k8s-c01 ~]# curl http://node-k8s-w03:32335
<html><body><h1>It works!</h1></body></html>

[root@node-k8s-c01 ~]# curl http://node-k8s-w04:32335
<html><body><h1>It works!</h1></body></html>

[root@node-k8s-c01 ~]# curl http://node-k8s-w05:32335
<html><body><h1>It works!</h1></body></html>

[root@node-k8s-c01 ~]# curl http://node-k8s-w06:32335
<html><body><h1>It works!</h1></body></html>

The httpd service is reachable through every worker, every master and the virtual IP.
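The NodePort (32335 here) is assigned by Kubernetes from the 30000-32767 range when the service is created, so it will differ on another cluster; `kubectl get svc serv1 -o jsonpath='{.spec.ports[0].nodePort}'` prints it directly. The ten `curl` checks above can also be scripted. A minimal sketch with two hypothetical helpers, `nodeport_of` (parses the PORT(S) column of `kubectl get svc`) and `check_nodeport` (reports whether each host answered with an HTTP success within 2 seconds):

```shell
#!/usr/bin/env bash
# Hypothetical helper: NodePort from a PORT(S) value of `kubectl get svc`,
# e.g. "80:32335/TCP" -> "32335" (first port only).
nodeport_of() {
  local ports="$1"
  ports="${ports#*:}"              # drop "80:"
  printf '%s\n' "${ports%%/*}"     # drop "/TCP..."
}

# Hypothetical helper: curl http://HOST:PORT/ on every host given and
# print "<host> OK" or "<host> FAIL" (2 s timeout, HTTP errors = FAIL).
check_nodeport() {
  local port="$1" host
  shift
  for host in "$@"; do
    if curl -sf --max-time 2 -o /dev/null "http://${host}:${port}/"; then
      echo "$host OK"
    else
      echo "$host FAIL"
    fi
  done
}

# Example:
#   check_nodeport 32335 node-k8s-vip node-k8s-m0{1..3} node-k8s-w0{1..6}
```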
